Linux installation from source

We now turn to the most complete and developer-friendly way to install TensorFlow. Building from source code also introduces the different tools used for compiling it.

Installing the Git source code version manager

Git is one of the best-known source code version managers, and it is the one Google chose for TensorFlow, whose code is published on GitHub.

In order to download the source code of TensorFlow, we will first install the Git source code manager:

Git installation in Linux (Ubuntu 16.04)

To install Git on your Ubuntu system, run the following command:

$ sudo apt-get install git

Installing the Bazel build tool

Bazel (bazel.io) is a build tool, based on the internal build tool Google has used for more than seven years, known as Blaze, and released as beta on September 9, 2015.

It is also the main build tool used by TensorFlow, so some advanced tasks require at least a minimal knowledge of it. Its main features include the following:

- Support for a number of languages, such as C++, Java, Python, and so on

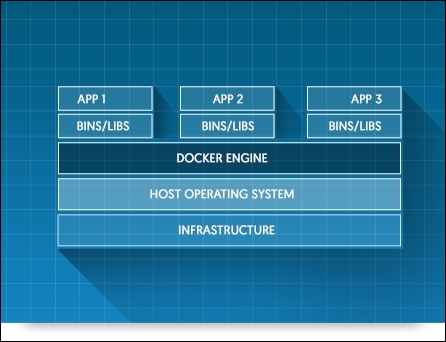

- Support for creating Android and iOS applications, and even Docker images

- Support for using libraries from many different sources, such as GitHub, Maven, and so on

- Extensibility through an API for adding custom build rules

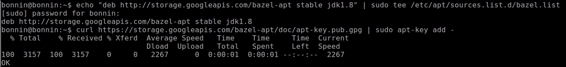

Adding the Bazel distribution URI as a package source

First, we add the Bazel repository to the list of package sources and register its key with apt, the tool that manages packages and their dependencies on the Ubuntu operating system:

$ echo "deb http://storage.googleapis.com/bazel-apt stable jdk1.8" | sudo tee /etc/apt/sources.list.d/bazel.list

$ curl https://storage.googleapis.com/bazel-apt/doc/apt-key.pub.gpg | sudo apt-key add -

Bazel installation

Updating and installing Bazel

Once the package source is configured, we proceed to install Bazel via apt-get:

$ sudo apt-get update && sudo apt-get install bazel
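Before moving on, it can help to confirm that both tools installed so far are reachable from the shell. The following is a minimal sketch rather than part of the official procedure; `require_tool` is a hypothetical helper name introduced here for illustration.

```shell
#!/bin/sh
# require_tool is a hypothetical helper (not part of git or Bazel).
# It reports whether a command is available on the PATH.
require_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "found: $1"
        return 0
    fi
    echo "missing: $1"
    return 1
}

# Check the two build prerequisites installed so far.
missing=0
for tool in git bazel; do
    require_tool "$tool" || missing=1
done

if [ "$missing" -eq 0 ]; then
    echo "prerequisites OK"
else
    echo "install the missing tools before continuing"
fi
```

If either tool is reported as missing, repeating the corresponding apt-get step is usually the first thing to try.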

Installing GPU support (optional)

This section shows how to install the packages required for GPU support in our Linux setup.

Currently, the only way to get GPU computing support in TensorFlow is through CUDA.

First, check that the nouveau driver for NVIDIA graphics cards is not loaded. To test this, execute the following command and check whether there is any output:

$ lsmod | grep nouveau

If there is no output, skip ahead to Installing CUDA system packages. Otherwise, execute the following commands:

$ echo -e "blacklist nouveau\nblacklist lbm-nouveau\noptions nouveau modeset=0\nalias nouveau off\nalias lbm-nouveau off\n" | sudo tee /etc/modprobe.d/blacklist-nouveau.conf

$ echo options nouveau modeset=0 | sudo tee -a /etc/modprobe.d/nouveau-kms.conf

$ sudo update-initramfs -u

$ sudo reboot  # the machine restarts at this point
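The check and the decision described above can be combined into one small script. This is a sketch under the assumption that `/proc/modules` is readable, since that is the file `lsmod` formats; `module_loaded` is a hypothetical helper name, and its optional second argument exists only so the function can be tried against a sample file.

```shell
#!/bin/sh
# module_loaded is a hypothetical helper: it looks for a kernel module name
# in a module listing (by default /proc/modules, the source lsmod reads).
# It returns non-zero when the listing is unreadable or the module is absent.
module_loaded() {
    listing="${2:-/proc/modules}"
    [ -r "$listing" ] && grep -q "^$1 " "$listing"
}

if module_loaded nouveau; then
    echo "nouveau is loaded: blacklist it and reboot"
else
    echo "nouveau is not loaded: continue with the CUDA packages"
fi
```

Running the script again after the reboot is a quick way to confirm that the blacklist took effect.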

Installing CUDA system packages

The first step is to install the required packages from the repositories:

sudo apt-get install -y linux-source linux-headers-$(uname -r) nvidia-graphics-drivers-361 nvidia-cuda-dev

sudo apt install nvidia-cuda-toolkit

sudo apt-get install libcupti-dev

sudo apt-get install linux-image-extra-virtual

Creating alternative locations

The current TensorFlow build configuration expects CUDA to be laid out in a very rigid directory structure, so we have to prepare a matching structure on our filesystem.

Here are the commands we will need to run:

sudo mkdir /usr/local/cuda

cd /usr/local/cuda

sudo ln -s /usr/lib/x86_64-linux-gnu/ lib64

sudo ln -s /usr/include/ include

sudo ln -s /usr/bin/ bin

sudo ln -s /usr/lib/x86_64-linux-gnu/ nvvm

sudo mkdir -p extras/CUPTI

cd extras/CUPTI

sudo ln -s /usr/lib/x86_64-linux-gnu/ lib64

sudo ln -s /usr/include/ include

sudo ln -s /usr/include/cuda.h /usr/local/cuda/include/cuda.h

sudo ln -s /usr/include/cublas.h /usr/local/cuda/include/cublas.h

sudo ln -s /usr/include/cudnn.h /usr/local/cuda/include/cudnn.h

sudo ln -s /usr/include/cupti.h /usr/local/cuda/extras/CUPTI/include/cupti.h

sudo ln -s /usr/lib/x86_64-linux-gnu/libcudart_static.a /usr/local/cuda/lib64/libcudart_static.a

sudo ln -s /usr/lib/x86_64-linux-gnu/libcublas.so /usr/local/cuda/lib64/libcublas.so

sudo ln -s /usr/lib/x86_64-linux-gnu/libcudart.so /usr/local/cuda/lib64/libcudart.so

sudo ln -s /usr/lib/x86_64-linux-gnu/libcudnn.so /usr/local/cuda/lib64/libcudnn.so

sudo ln -s /usr/lib/x86_64-linux-gnu/libcufft.so /usr/local/cuda/lib64/libcufft.so

sudo ln -s /usr/lib/x86_64-linux-gnu/libcupti.so /usr/local/cuda/extras/CUPTI/lib64/libcupti.so
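With this many links, a typo in one path is easy to miss. The following sketch lists the entries created under the two directories and flags any link whose target does not exist. `report_links` is a hypothetical helper name introduced for illustration, and the two directories passed to it are the ones used in the commands above.

```shell
#!/bin/sh
# report_links is a hypothetical helper: for every entry directly under a
# directory, it prints whether the entry resolves to an existing target.
report_links() {
    if [ ! -d "$1" ]; then
        echo "no such directory: $1"
        return 0
    fi
    for entry in "$1"/*; do
        # Skip the unexpanded pattern when the directory is empty.
        [ -e "$entry" ] || [ -L "$entry" ] || continue
        if [ -e "$entry" ]; then
            echo "ok: $entry"
        else
            # A symlink whose target is missing fails -e but passes -L.
            echo "broken: $entry"
        fi
    done
}

report_links /usr/local/cuda
report_links /usr/local/cuda/extras/CUPTI
```

Any line starting with `broken:` points to a link whose target package is probably not installed yet.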

TensorFlow uses the additional cuDNN package to accelerate the deep neural network operations.

Next, we download the cuDNN package:

$ wget http://developer.download.nvidia.com/compute/redist/cudnn/v5/cudnn-7.5-linux-x64-v5.0-ga.tgz

Then we need to extract the archive and copy its libraries and header file into the CUDA directories:

$ sudo cp cuda/lib64/libcudnn* /usr/local/cuda/lib64

$ sudo cp cuda/include/cudnn.h /usr/local/cuda/include/
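The copy commands above read from a `cuda/` directory, so they assume the downloaded archive has already been extracted in the current directory. The extraction step is not shown in the text. A minimal sketch of it follows, assuming the archive unpacks into `cuda/include` and `cuda/lib64`; `extract_cudnn` is a hypothetical helper name.

```shell
#!/bin/sh
# extract_cudnn is a hypothetical helper: it unpacks a cuDNN archive into the
# current directory and checks for the header that the copy commands expect.
extract_cudnn() {
    if [ ! -f "$1" ]; then
        echo "archive not found: $1"
        return 1
    fi
    # Assumption: the archive contains a top-level cuda/ directory.
    tar -xzf "$1" && [ -f cuda/include/cudnn.h ]
}

# File name taken from the wget command above.
if extract_cudnn cudnn-7.5-linux-x64-v5.0-ga.tgz; then
    echo "extracted: cuda/include and cuda/lib64 are ready to copy"
fi
```

Run it from the directory where wget saved the archive, before issuing the two cp commands.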

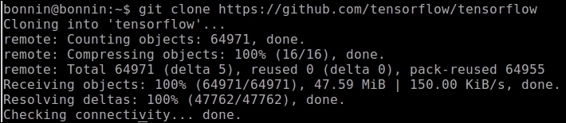

Finally, we arrive at the task of getting the TensorFlow source code.

Getting it is as easy as executing the following command:

$ git clone https://github.com/tensorflow/tensorflow

Git installation

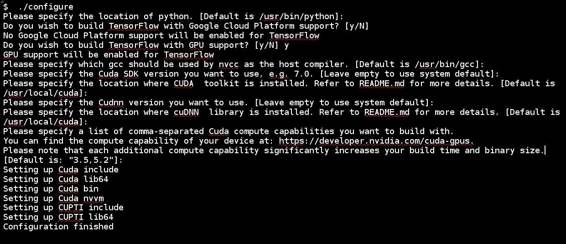

Configuring TensorFlow build

Then we change into the main tensorflow directory:

$ cd tensorflow

And then we simply run the configure script:

$ ./configure

In the following figure you can see the answers to most of the questions (almost all of them are answered by pressing Enter or typing yes).

CUDA configuration

We are now ready to build the library.

$ TF_UNOFFICIAL_SETTING=1 ./configure

After all the preparation steps, we will finally compile TensorFlow. The build target in the following command may catch your attention because it refers to a tutorial. We build this example because doing so also builds the base installation, and the resulting program gives us a way to test whether the installation worked.

Run the following command:

$ bazel build -c opt --config=cuda //tensorflow/cc:tutorials_example_trainer
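To make the parts of this command easier to see, the sketch below assembles it from its pieces: `-c opt` selects an optimized build and `--config=cuda` enables the CUDA configuration. The CPU-only variant, which simply omits `--config=cuda`, is an assumption added here for comparison and is not covered in the text. `build_cmd` is a hypothetical helper that only prints the command and does not run Bazel.

```shell
#!/bin/sh
# build_cmd is a hypothetical helper: it prints (but does not run) the bazel
# command line for a GPU build or, as an assumed variant, a CPU-only build.
build_cmd() {
    target="//tensorflow/cc:tutorials_example_trainer"
    if [ "$1" = "gpu" ]; then
        echo "bazel build -c opt --config=cuda $target"
    else
        # Assumption: leaving out --config=cuda yields a CPU-only build.
        echo "bazel build -c opt $target"
    fi
}

build_cmd gpu
build_cmd cpu
```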

Now it is time to test the installation. From the main tensorflow installation directory, just execute the following command:

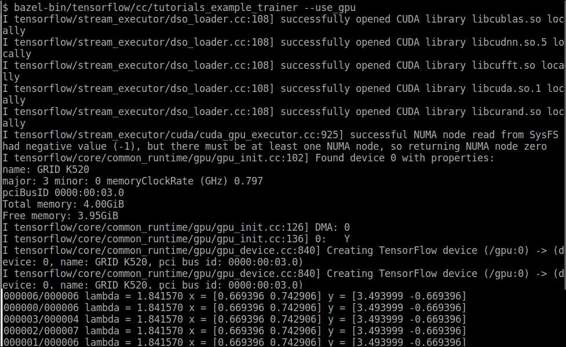

$ bazel-bin/tensorflow/cc/tutorials_example_trainer --use_gpu

This is a sample of the command's output:

TensorFlow GPU test