Scrapy 1.5 文档¶

这篇文档包含了所有你需要了解的关于Scrapy的知识。

获得帮助¶

遇到麻烦? 我们能提供帮助!

- 试试常见问题解答 - 它有一些常见问题的答案。

- 寻找具体信息? 试试索引或模块索引。

- 使用scrapy标记在StackOverflow中询问或搜索问题。

- 在Scrapy subreddit中询问或搜索问题。

- 搜索scrapy-users邮件列表的存档问题。

- 在#scrapy IRC channel中提问

- 在我们的问题跟踪器中用Scrapy报告错误。

第一步¶

初窥Scrapy¶

Scrapy是一种用于抓取网站和提取结构化数据的应用程序框架,可用于广泛的有用应用程序,如数据挖掘,信息处理或历史存档。

尽管Scrapy最初是为web scraping设计的,但它也可以用于使用API(如Amazon Associates Web Services)提取数据或用作通用目的的网页抓取工具。

Spider示例演示¶

为了向您展示Scrapy带来的东西,我们将通过一个Scrapy爬虫示例来向您演示运行Spider的最简单方式。

这里是一个爬虫的代码,它可以在分页之后从网站http://quotes.toscrape.com中抓取名人名言。

import scrapy

class QuotesSpider(scrapy.Spider):

name = "quotes"

start_urls = [

'http://quotes.toscrape.com/tag/humor/',

]

def parse(self, response):

for quote in response.css('div.quote'):

yield {

'text': quote.css('span.text::text').extract_first(),

'author': quote.xpath('span/small/text()').extract_first(),

}

next_page = response.css('li.next a::attr("href")').extract_first()

if next_page is not None:

yield response.follow(next_page, self.parse)

把它放在一个文本文件中,命名为quotes_spider.py,然后使用runspider命令运行Spider:

scrapy runspider quotes_spider.py -o quotes.json

完成后,您将得到包含了文本和作者的JSON格式引号列表的quotes.json文件,看起来像这样(为了更好的可读性在这里重新格式化):

[{

"author": "Jane Austen",

"text": "\u201cThe person, be it gentleman or lady, who has not pleasure in a good novel, must be intolerably stupid.\u201d"

},

{

"author": "Groucho Marx",

"text": "\u201cOutside of a dog, a book is man's best friend. Inside of a dog it's too dark to read.\u201d"

},

{

"author": "Steve Martin",

"text": "\u201cA day without sunshine is like, you know, night.\u201d"

},

...]

刚刚发生了什么?¶

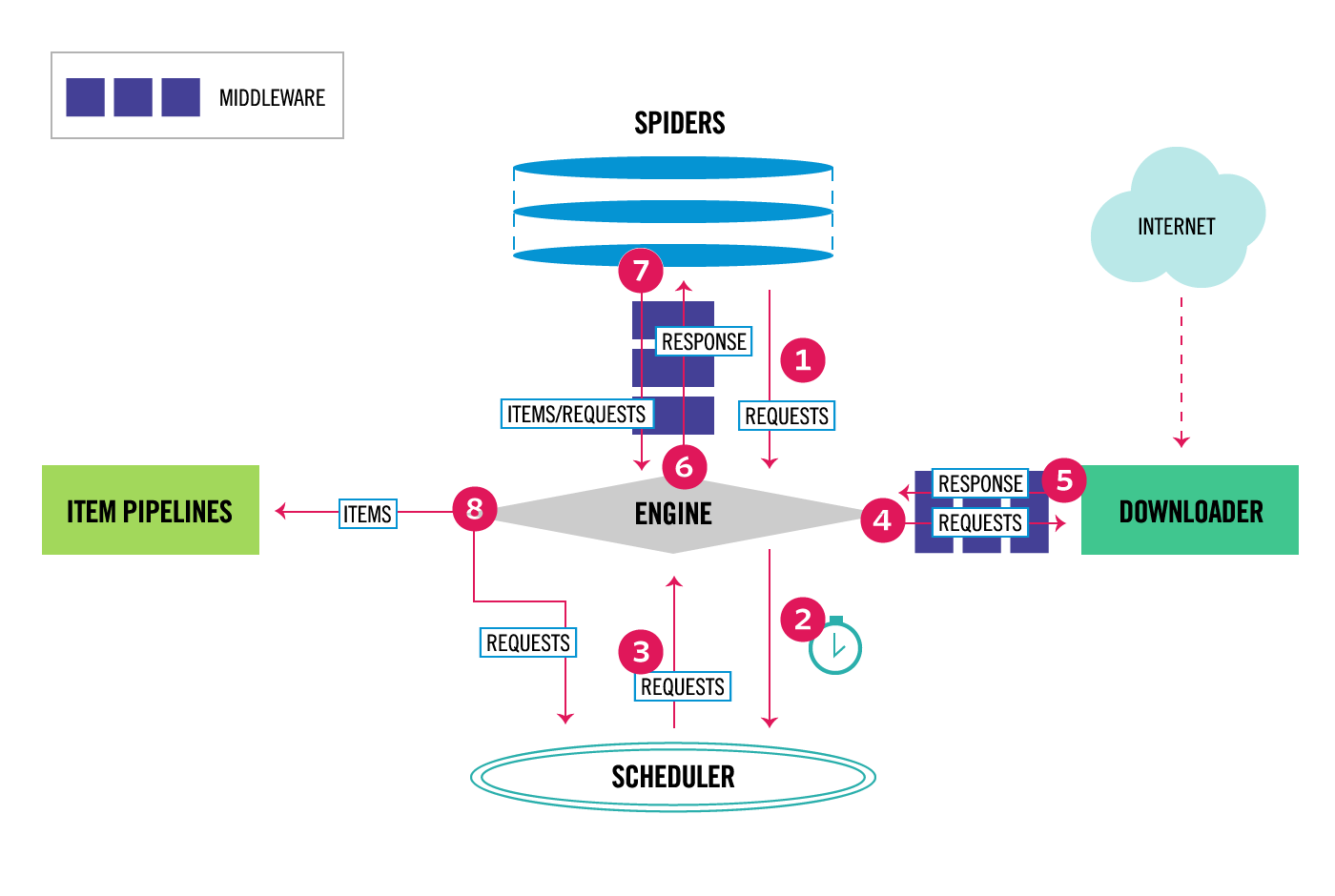

当您运行scrapy runspider quotes_spider.py命令时,Scrapy会从内部查找Spider的定义并通过它的爬虫引擎运行Spider。

爬虫通过向start_urls属性中定义的URL发送请求(本例中,仅在humor类别中引用的URL),然后调用默认回调方法 parse,将响应对象作为参数传递。 在parse回调中,我们CSS选择器循环引用元素,产生一个被提取引用的文本和作者的Python字典,查找下一页的链接并对另一个请求使用同样的parse方法作为回调。

在这里您会注意到Scrapy的一个主要优势:请求被scheduled and processed asynchronously。 这意味着Scrapy不需要等待请求处理完成,它可以同时发送另一个请求或做其他事情。 这也意味着即使某些请求失败或处理时发生错误也可以继续执行其他请求。

这不仅使您能够快速执行爬取(在容错方式下同时发送多个并发请求),Scrapy还可以使你通过a few settings来优雅的控制爬虫。 您可以执行诸如在每个请求之间设置下载延迟,限制每个域或每个IP的并发请求数量,甚至using an auto-throttling extension尝试自动调节这些延迟。

Note

这里使用了feed exports生成JSON文件,您可以轻易更改导出格式(例如XML或CSV)或存储后端(例如FTP或Amazon S3)。 您还可以编写item pipeline将项目存储在数据库中。

还有什么?¶

您已经看过如何使用Scrapy从网站中提取和存储信息,但这只是表面。 Scrapy提供了许多强大的功能,可以使抓取变得简单高效,例如:

- 内置支持使用扩展CSS选择器和XPath表达式从HTML/XML源selecting and extracting数据,使用正则表达式作为辅助方法进行提取。

- 一个交互式shell控制台(IPython软件)用于尝试使用CSS和XPath表达式来抓取数据,这在编写或调试蜘蛛程序时非常有用。

- 内置支持导出多种格式(JSON,CSV,XML),也可将它们存储在多种后端(FTP,S3,本地文件系统)。

- 强大的编码支持和自动检测,用于处理外部的,非标准的和破损的编码声明。

- 强大的可扩展性支持,允许您使用signals和定义良好的API(middlewares,extensions和pipelines)。

- 广泛的内置扩展和中间件处理:

- cookies和会话处理

- HTTP特性,如压缩,认证,缓存

- 用户代理欺骗

- robots.txt

- 爬行深度限制

- 更多

- 一个Telnet控制台,用于连接Scrapy进程中运行的Python控制台,以反编译和调试您的爬虫程序

- 还有其他有用的东西,如可重复使用的用于抓取站点地图和XML/CSV中网站的spider,与抓取的信息关联的自动下载图片(或任何其他媒体)的媒体管道,缓存DNS解析器,等等!

安装指南¶

安装Scrapy ¶

Scrapy在Python 2.7和Python 3.4以上运行,在CPython(默认Python实现)和PyPy(从PyPy 5.9开始)下运行。

如果您使用Anaconda或Miniconda,可以从conda-forge渠道安装软件包,该软件包具有最新的软件包适用于Linux,Windows和OS X.

要使用conda安装Scrapy,请运行:

conda install -c conda-forge scrapy

或者,如果您已经熟悉Python包的安装,则可以使用PyPI安装Scrapy及其依赖项:

pip install Scrapy

请注意,有时这可能需要根据您的操作系统解决某些Scrapy依赖项的编译问题,因此请务必检查平台特定安装说明。

我们强烈建议您在专用的virtualenv中安装Scrapy,以避免与系统软件包冲突。

有关更详细的平台特定说明,请继续阅读。

有用的知识¶

Scrapy是用纯Python编写的,并且依赖于几个关键的Python包:

- lxml,一种高效的XML和HTML解析器

- parsel,一个基于lxml的HTML/XML数据提取库

- w3lib,一款用于处理网址和网页编码的多用途帮手

- twisted,一个异步网络框架

- cryptography和pyOpenSSL,用来处理各种网络级安全需求

已测试过Scrapy的最低版本是:

- Twisted 14.0

- lxml 3.4

- pyOpenSSL 0.14

Scrapy可能会使用这些软件包的旧版本,但不能保证它会继续工作,因为它没有经过测试。

其中一些软件包本身依赖于非Python包,这可能需要额外的安装步骤,具体取决于您的平台。 请查阅平台特性指南。

如果出现依赖关系相关的任何问题,请参阅其各自的安装说明:

使用虚拟环境(推荐)¶

TL; DR:我们建议将Scrapy安装在所有平台的虚拟环境中。

Python软件包既可以全局安装(也可以是系统范围),也可以安装在用户空间中。 我们不建议在系统范围安装scrapy。

相反,我们建议您在所谓的“虚拟环境”(virtualenv)中安装scrapy。

Virtualenvs可以使Scrapy不会与已安装的Python系统软件包发生冲突(这可能会破坏您的一些系统工具和脚本),仍然可以正常使用pip(不需要sudo等)。

为从虚拟环境开始,参照虚拟环境指南. 要全局安装它(全局安装在这里实际上有帮助),将会被运行:

$ [sudo] pip install virtualenv

请查看用户指南了解如何创建您的virtualenv。

Note

如果您使用Linux或OS X,virtoalenvwrapper是创建virtualenvs的便利工具。

一旦你创建了一个virtualenv,你就可以像安装任何其他Python包一样,通过pip安装scrapy。

(您可能需要事先安装非Python依赖关系,请参阅下面的平台特定指南)。

创建Python virtualenvs可以使用Python 2或Python 3作为默认。

- 如果你想用Python 3安装scrapy,请在Python 3 virtualenv中安装scrapy。

- 如果你想用Python 2安装scrapy,请在Python 2 virtualenv中安装scrapy。

平台特定的安装说明¶

Windows¶

尽管可以使用pip在Windows上安装Scrapy,但我们建议您安装Anaconda或Miniconda,并使用conda-forge渠道中的软件包,这将避免大多数安装问题。

当你安装Anaconda或Miniconda完成之后,安装Scrapy:

conda install -c conda-forge scrapy

Ubuntu 14.04或更高版本¶

Scrapy 目前在测试最新版本的lxml,twisted和pyOpenSSL,并且在与最新的Ubuntu发行版本兼容 同时也支持老版本的Ubuntu,比如Ubuntu 14.04,尽管TLS有潜在问题

不要 使用 python-scrapy 提供自Ubuntu, 太老同时速度太慢,无法赶上最新的Scrapy

为了安装scrapy在Ubuntu或者基于Ubuntu的系统,你需要安装以下依赖包

sudo apt-get install python-dev python-pip libxml2-dev libxslt1-dev zlib1g-dev libffi-dev libssl-dev

python-dev,zlib1g-dev,libxml2-dev和libxslt1-dev依赖于lxmllibssl-dev和libffi-dev要求安装cryptography

如果你想在python 3上安装scrapy,你同时需要安装python 3

sudo apt-get install python3 python3-dev

在 虚拟环境中:virtualenv, 你可以用以下 pip 安装Scrapy:

pip install scrapy

Note

同样无python依赖包可被用于安装Scrapy在Debian Jessie8.0及更高版本

Mac OS X¶

构建Scrapy的依赖需要存在C编译器和开发头文件。 在OS X上,这通常由Apple的Xcode开发工具提供。 要安装Xcode命令行工具,请打开一个终端窗口并运行:

xcode-select --install

已知问题可能会阻止pip更新系统包。 必须解决这个问题才能成功安装Scrapy及其依赖项。 以下是一些建议的解决方案

(推荐) 不要使用系统python,安装一个不会与系统其余部分冲突的新的更新版本。 下面介绍了如何使用 homebrew 软件包管理器:

按照https://brew.sh/中的说明安装homebrew

更新你的

PATH变量,应该在系统包之前声明homebrew包(如果你使用 zsh作为默认shell,将.bashrc改为.zshrc):echo "export PATH=/usr/local/bin:/usr/local/sbin:$PATH" >> ~/.bashrc

重新加载

.bashrc以确保更改发生:source ~/.bashrc

安装python:

brew install pythonPython的最新版本已经捆绑了

pip,所以你不需要单独安装它。 如果情况并非如此,请升级python:brew update; brew upgrade python

(可选)在隔离的python环境中安装Scrapy。

此方法是上述OS X问题的一种解决方法,也是管理依赖关系的良好实践,可以作为第一种方法的补充。

virtualenv是一个可用于在python中创建虚拟环境的工具。 我们建议阅读http://docs.python-guide.org/en/latest/dev/virtualenvs/这个教程入门。

在以上任一方法完成后,您应该能够安装Scrapy:

pip install Scrapy

PyPy¶

我们建议使用最新的PyPy版本。 测试版本是5.9.0。 对于PyPy3,仅测试了Linux安装。

大多数scrapy依赖现在都有用于CPython的二进制转义,但不适用于PyPy。

这意味着这些依赖将在安装过程中建立。

在OS X上,您可能会遇到构建密码依赖性的问题,此问题的解决方案在这里描述,即brew install openssl,然后导出此命令推荐的标志(仅在安装scrapy时需要)。 除了安装构建依赖关系之外,在Linux上安装没有特殊问题。

在Windows上使用PyPy安装scrapy未经测试。

你可以通过运行以下指令来检查scrapy是否安装 scrapy bench.

如果这条指令给与以下错误

TypeError: ... got 2 unexpected keyword arguments,这意味着安装工具不能获取 PyPy-specific依赖包。

为解决此问题,运行以下指令pip install 'PyPyDispatcher>=2.1.0'.

Scrapy教程¶

在本教程中,我们假定您的系统上已经安装了Scrapy。 如果不是这种情况,请参阅安装指南。

我们将爬取quota.toscrape.com,一个列出著名作家语录的网站。

本教程将引导您完成这些任务:

- 创建一个新的Scrapy项目

- 编写一个spider来抓取网站并提取数据

- 使用命令行导出爬取的数据

- 更改spider递归地跟随链接

- 使用spider参数

Scrapy是用Python编写的。 如果您对语言很陌生,您可能想先了解语言是什么样子,以充分利用Scrapy。

如果您已经熟悉其他语言,希望快速学习Python,我们建议您阅读Dive Into Python 3。 或者,您可以按照Python教程进行操作。

如果您是编程新手,想从Python开始,那么在线书籍Learn Python The Hard Way将对您非常有用。 你也可以看看非程序员的Python资源列表。

创建一个项目¶

在开始抓取之前,您不得不建立一个新的Scrapy项目。 进入您想要存储代码并运行的目录:

scrapy startproject tutorial

这将创建一个包含以下内容的tutorial目录:

tutorial/

scrapy.cfg # 部署配置文件

tutorial/ # 项目的Python模块,你将在这里输入你的代码

__init__.py

items.py # 项目的items定义文件

middlewares.py # 项目中间件文件

pipelines.py # 项目管道文件

settings.py # 项目设置文件

spiders/ # 稍后放置spider的文件夹

__init__.py

我们的第一个Spider¶

Spider是你定义的类,并且Scrapy用它从网站(或一组网站)爬取信息。 它们继承自scrapy.Spider,定义初始请求,可选择如何跟随页面中的链接,以及如何解析下载的页面内容以提取数据。

这是我们第一个Spider的代码。 将它保存在项目中tutorial/spiders目录下名为quotes_spider.py的文件中:

import scrapy

class QuotesSpider(scrapy.Spider):

name = "quotes"

def start_requests(self):

urls = [

'http://quotes.toscrape.com/page/1/',

'http://quotes.toscrape.com/page/2/',

]

for url in urls:

yield scrapy.Request(url=url, callback=self.parse)

def parse(self, response):

page = response.url.split("/")[-2]

filename = 'quotes-%s.html' % page

with open(filename, 'wb') as f:

f.write(response.body)

self.log('Saved file %s' % filename)

正如你所看到的,我们的Spider继承自scrapy.Spider,定义了一些属性和方法:

name:标识Spider。 它在项目中必须是唯一的,也就是说,不能为不同的Spider设置相同的名称。start_requests():必须提供一个Spider开始抓取的迭代请求(你可以返回一个请求列表或者编写一个生成器函数)。 随后的请求将从这些初始请求中接连生成。parse():一个用来处理每个请求下载的响应的方法。 response参数是TextResponse的一个实例,它包含了页面内容以便进一步处理。parse()方法通常会解析response,将抓到的数据提取为字典,同时找出接下来新的URL创建新的请求(Request)。

如何运行我们的spider¶

为了让我们的spider工作,请转到项目的顶层目录并运行:

scrapy crawl quotes

这个命令运行我们刚刚添加名为quotes的spider,它将发送一些针对quotes.toscrape.com域的请求。 你会得到类似于这样的输出:

... (omitted for brevity)

2016-12-16 21:24:05 [scrapy.core.engine] INFO: Spider opened

2016-12-16 21:24:05 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2016-12-16 21:24:05 [scrapy.extensions.telnet] DEBUG: Telnet console listening on 127.0.0.1:6023

2016-12-16 21:24:05 [scrapy.core.engine] DEBUG: Crawled (404) <GET http://quotes.toscrape.com/robots.txt> (referer: None)

2016-12-16 21:24:05 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://quotes.toscrape.com/page/1/> (referer: None)

2016-12-16 21:24:05 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://quotes.toscrape.com/page/2/> (referer: None)

2016-12-16 21:24:05 [quotes] DEBUG: Saved file quotes-1.html

2016-12-16 21:24:05 [quotes] DEBUG: Saved file quotes-2.html

2016-12-16 21:24:05 [scrapy.core.engine] INFO: Closing spider (finished)

...

现在,检查当前目录中的文件。 您应该注意到创建了两个新文件:quotes-1.html和quotes-2.html,其中包含各个网址的内容,就像parse方法指示的那样。

Note

如果您想知道为什么我们还没有解析HTML,请坚持下去,我们很快就会涉及。

发生了什么?¶

Scrapy调度Spider的start_requests方法返回的scrapy.Request对象。 在收到每个响应后,它会实例化Response对象并调用与请求相关的回调方法(本例中为parse方法)将响应作为参数传递。

start_requests方法的快捷方式¶

除了实现从网址生成scrapy.Request对象的start_requests()方法外,您还可以定义一个包含网址列表的start_urls类属性。 这个列表将被默认的start_requests()用来为你的spider创建初始请求:

import scrapy

class QuotesSpider(scrapy.Spider):

name = "quotes"

start_urls = [

'http://quotes.toscrape.com/page/1/',

'http://quotes.toscrape.com/page/2/',

]

def parse(self, response):

page = response.url.split("/")[-2]

filename = 'quotes-%s.html' % page

with open(filename, 'wb') as f:

f.write(response.body)

parse()方法将会被调用来处理这些URL的每个请求,即使我们没有明确告诉Scrapy这样做。 发生这种情况是因为在没有为request明确分配回调方法时,parse()是Scrapy的默认回调方法。

提取数据¶

学习如何使用Scrapy提取数据的最佳方式是尝试使用shell选择器Scrapy shell。 运行:

scrapy shell 'http://quotes.toscrape.com/page/1/'

注意

请记住,从命令行运行Scrapy shell时应该将url用引号括起来,否则包含参数的url(例如 & 字符) 将出现问题。

在Windows上,请使用双引号:

scrapy shell "http://quotes.toscrape.com/page/1/"

你会看到类似于:

[ ... Scrapy log here ... ]

2016-09-19 12:09:27 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://quotes.toscrape.com/page/1/> (referer: None)

[s] Available Scrapy objects:

[s] scrapy scrapy module (contains scrapy.Request, scrapy.Selector, etc)

[s] crawler <scrapy.crawler.Crawler object at 0x7fa91d888c90>

[s] item {}

[s] request <GET http://quotes.toscrape.com/page/1/>

[s] response <200 http://quotes.toscrape.com/page/1/>

[s] settings <scrapy.settings.Settings object at 0x7fa91d888c10>

[s] spider <DefaultSpider 'default' at 0x7fa91c8af990>

[s] Useful shortcuts:

[s] shelp() Shell help (print this help)

[s] fetch(req_or_url) Fetch request (or URL) and update local objects

[s] view(response) View response in a browser

>>>

使用shell,您可以在响应对象中使用CSS选择元素:

>>> response.css('title')

[<Selector xpath='descendant-or-self::title' data='<title>Quotes to Scrape</title>'>]

运行response.css('title')的结果是一个名为SelectorList的列表对象,它表示一个包裹着XML/HTML元素的Selector对象的列表,并允许您运行更多查询来细化选择或提取数据。

要从上述title中提取文本,您可以执行以下操作:

>>> response.css('title::text').extract()

['Quotes to Scrape']

这里需要注意两点:其一是我们已经向CSS查询添加了:: text,这意味着我们只想直接在<title>中选择text元素。 如果我们不指定:: text,我们会得到完整的标题元素,包括它的标签:

>>> response.css('title').extract()

['<title>Quotes to Scrape</title>']

另一件事是调用.extract()的结果是一个列表,因为我们正在处理SelectorList的实例。 如果你只是想要第一个结果,在这种情况下,你可以这样做:

>>> response.css('title::text').extract_first()

'Quotes to Scrape'

或者,你可以这样写:

>>> response.css('title::text')[0].extract()

'Quotes to Scrape'

但是,如果找不到与选择匹配的任何元素,使用.extract_first()可避免发生IndexError并返回None。

这里有一个教训:对于大多数爬虫代码,你希望它能够适应在页面上找不到的东西而产生的错误,所以即使某些部分没有被爬取,你至少可以得到一部分数据。

除了extract()和extract_first()方法之外,还可以使用re()方法应用正则表达式提取:

>>> response.css('title::text').re(r'Quotes.*')

['Quotes to Scrape']

>>> response.css('title::text').re(r'Q\w+')

['Quotes']

>>> response.css('title::text').re(r'(\w+) to (\w+)')

['Quotes', 'Scrape']

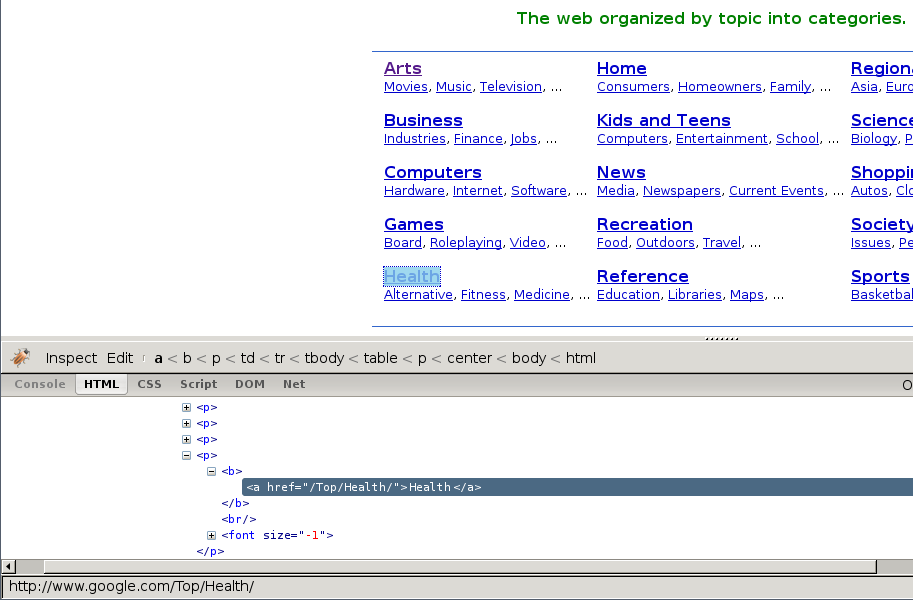

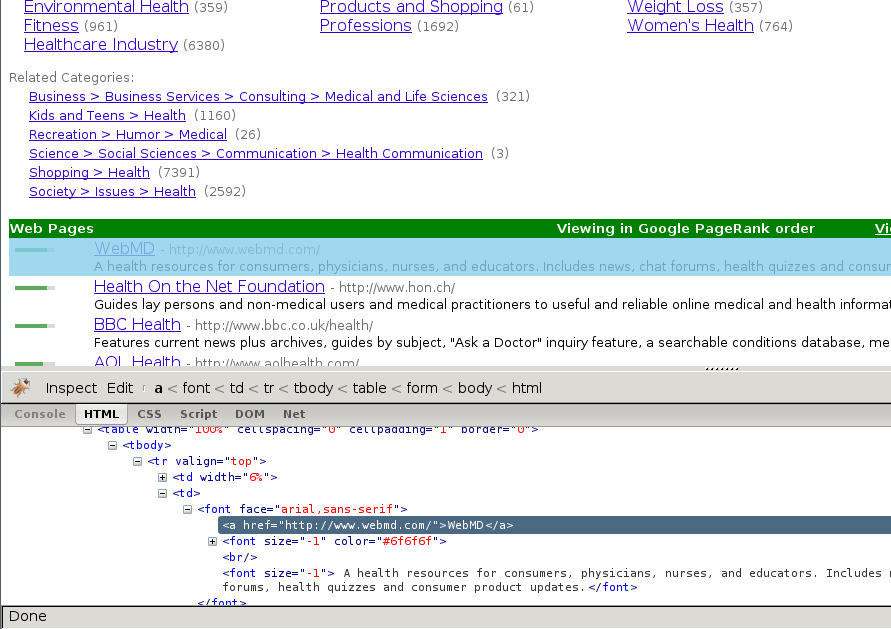

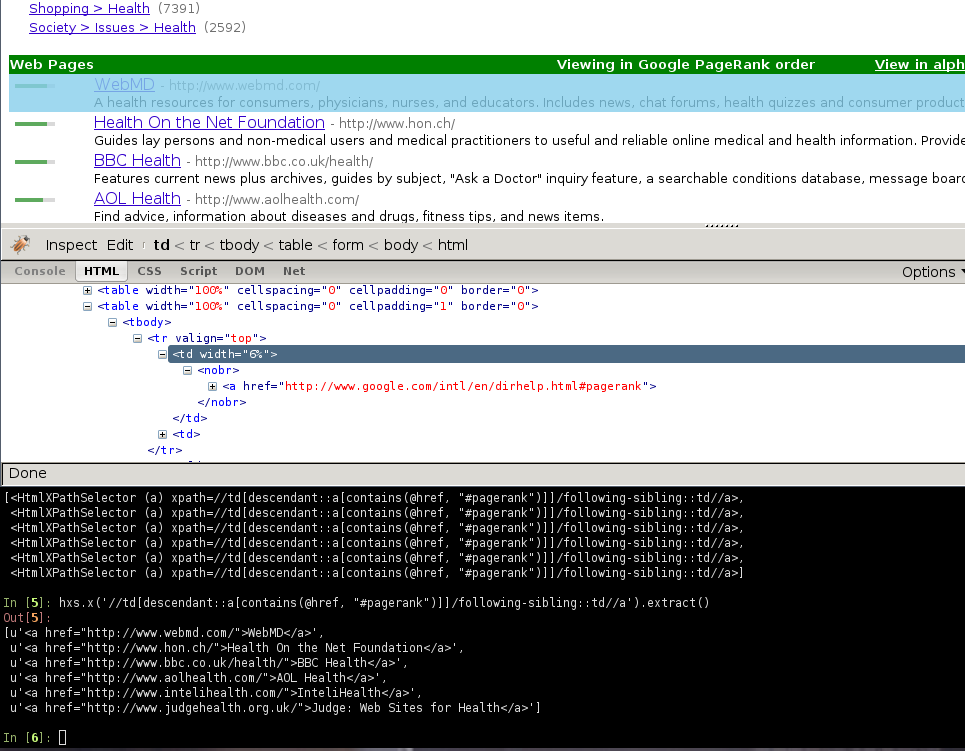

为了找到合适的CSS选择器来使用,你可能会发现在你的web浏览器的shell中使用view(response)打开响应页面很有用。

您可以使用浏览器开发工具或Firebug扩展(请参阅使用Firebug进行爬取和使用Firefox进行爬取部分)。

选择器小工具也是一个很好的工具,可以快速查找可视化选定元素的CSS选择器,它可以在许多浏览器中使用。

XPath:简要介绍¶

>>> response.xpath('//title')

[<Selector xpath='//title' data='<title>Quotes to Scrape</title>'>]

>>> response.xpath('//title/text()').extract_first()

'Quotes to Scrape'

XPath表达式非常强大,是Scrapy选择器的基础。 实际上,CSS选择器在底层被转换为XPath。 如果仔细阅读shell中选择器对象的文本表示形式,你可以发现这一点。

尽管XPath表达式可能不如CSS选择器那么受欢迎,但它提供了更加强大的功能,除了浏览结构之外,它还可以查看内容。 使用XPath,您可以像这样选择内容:选择包含文本“下一页”的链接。 这使得XPath非常适合抓取任务,并且即使您已经知道如何构建CSS选择器,我们也鼓励您学习XPath,这会使抓取更容易。

我们在这里不会涉及很多XPath,但您可以阅读有关使用XPath和Scrapy选择器的更多信息。 想要了解更多XPath的信息,我们建议通过示例学习XPath和学习“如何用XPath思考”。

提取语录和作者¶

现在您已经了解了一些关于选择和提取的内容,让我们通过编写代码来从网页中提取语录来完成我们的spider。

http://quotes.toscrape.com中的每个语录都是由HTML元素表示的,如下所示:

<div class="quote">

<span class="text">“The world as we have created it is a process of our

thinking. It cannot be changed without changing our thinking.”</span>

<span>

by <small class="author">Albert Einstein</small>

<a href="/author/Albert-Einstein">(about)</a>

</span>

<div class="tags">

Tags:

<a class="tag" href="/tag/change/page/1/">change</a>

<a class="tag" href="/tag/deep-thoughts/page/1/">deep-thoughts</a>

<a class="tag" href="/tag/thinking/page/1/">thinking</a>

<a class="tag" href="/tag/world/page/1/">world</a>

</div>

</div>

让我们打开scrapy shell,试着提取我们想要的数据:

$ scrapy shell 'http://quotes.toscrape.com'

我们通过以下方式获得语录HTML元素的选择器列表:

>>> response.css("div.quote")

上述查询返回的每个选择器都允许我们对其子元素运行更多查询。 让我们将第一个选择器分配给一个变量,以便我们可以直接在特定的语录上运行我们的CSS选择器:

>>> quote = response.css("div.quote")[0]

现在,我们从刚刚创建的quote对象中提取title,author和tags

>>> title = quote.css("span.text::text").extract_first()

>>> title

'“The world as we have created it is a process of our thinking. It cannot be changed without changing our thinking.”'

>>> author = quote.css("small.author::text").extract_first()

>>> author

'Albert Einstein'

鉴于标签是一个字符串列表,我们可以使用.extract()方法来获取所有这些标签:

>>> tags = quote.css("div.tags a.tag::text").extract()

>>> tags

['change', 'deep-thoughts', 'thinking', 'world']

在弄清楚了如何提取每一个数据之后,我们现在可以遍历所有语录元素,将它们放在一起形成一个Python字典:

>>> for quote in response.css("div.quote"):

... text = quote.css("span.text::text").extract_first()

... author = quote.css("small.author::text").extract_first()

... tags = quote.css("div.tags a.tag::text").extract()

... print(dict(text=text, author=author, tags=tags))

{'tags': ['change', 'deep-thoughts', 'thinking', 'world'], 'author': 'Albert Einstein', 'text': '“The world as we have created it is a process of our thinking. It cannot be changed without changing our thinking.”'}

{'tags': ['abilities', 'choices'], 'author': 'J.K. Rowling', 'text': '“It is our choices, Harry, that show what we truly are, far more than our abilities.”'}

... a few more of these, omitted for brevity

>>>

在我们的spider中提取数据¶

让我们回到我们的spider。 直到现在,它并没有特别提取任何数据,只是将整个HTML页面保存到本地文件中。 让我们将提取逻辑整合到我们的spider中。

Scrapy spider通常会生成许多包含从页面提取的数据的字典。 为此,我们在回调中使用yield Python关键字,如下所示:

import scrapy

class QuotesSpider(scrapy.Spider):

name = "quotes"

start_urls = [

'http://quotes.toscrape.com/page/1/',

'http://quotes.toscrape.com/page/2/',

]

def parse(self, response):

for quote in response.css('div.quote'):

yield {

'text': quote.css('span.text::text').extract_first(),

'author': quote.css('small.author::text').extract_first(),

'tags': quote.css('div.tags a.tag::text').extract(),

}

如果你运行这个spider,它会输出提取的数据和日志:

2016-09-19 18:57:19 [scrapy.core.scraper] DEBUG: Scraped from <200 http://quotes.toscrape.com/page/1/>

{'tags': ['life', 'love'], 'author': 'André Gide', 'text': '“It is better to be hated for what you are than to be loved for what you are not.”'}

2016-09-19 18:57:19 [scrapy.core.scraper] DEBUG: Scraped from <200 http://quotes.toscrape.com/page/1/>

{'tags': ['edison', 'failure', 'inspirational', 'paraphrased'], 'author': 'Thomas A. Edison', 'text': "“I have not failed. I've just found 10,000 ways that won't work.”"}

存储抓取的数据¶

存储抓取数据的最简单方法是应用Feed exports,使用以下命令:

scrapy crawl quotes -o quotes.json

这将生成一个quotes.json文件,其中包含所有序列化为JSON的已抓取项目。

由于历史原因,Scrapy附加到给定文件而不是覆盖其内容。 如果您执行该命令两次在第二次执行前未移除该文件,则会生成一个损坏的JSON文件。

您也可以使用其他格式,如JSON:

scrapy crawl quotes -o quotes.jl

JSON行格式非常有用,因为它类似于流,您可以轻松地向其添加新记录。 当您运行两次时,它不会发生和JSON相同的问题。 另外,由于每条记录都是一条独立的行,因此可以处理大文件而不必将所有内容都放在内存中,因此有些工具(如JQ)可帮助在命令行执行此操作。

在小型项目中(如本教程中的),应该足够了。

但是,如果您想使用爬虫项目执行更复杂的事情,可以编写Item Pipeline。 项目创建时,已经为您设置了Item Pipelines的预留文件,位于tutorial/pipelines.py中。 尽管如果你只是想存储抓取的数据,你不需要实现任何的item pipeline。

找到下个链接¶

比方说,您不需要从http://quotes.toscrape.com中的前两个页面中抓取内容,而是需要来自网站中所有页面的格言。

现在您已经知道如何从网页中提取数据,我们来看看如何从它们中找到链接。

首先要提取我们想要继续处理的页面的链接。 检视我们的页面,我们可以看到有一个带有标记的下一页的链接:

<ul class="pager">

<li class="next">

<a href="/page/2/">Next <span aria-hidden="true">→</span></a>

</li>

</ul>

我们可以尝试在shell中提取它:

>>> response.css('li.next a').extract_first()

'<a href="/page/2/">Next <span aria-hidden="true">→</span></a>'

这会获取锚点元素,但我们需要属性href。 为此,Scrapy支持一个CSS扩展,让您选择属性内容,如下所示:

>>> response.css('li.next a::attr(href)').extract_first()

'/page/2/'

现在让我们看看将Spider修改为递归地follow到下一页的链接,从中提取数据:

import scrapy

class QuotesSpider(scrapy.Spider):

name = "quotes"

start_urls = [

'http://quotes.toscrape.com/page/1/',

]

def parse(self, response):

for quote in response.css('div.quote'):

yield {

'text': quote.css('span.text::text').extract_first(),

'author': quote.css('small.author::text').extract_first(),

'tags': quote.css('div.tags a.tag::text').extract(),

}

next_page = response.css('li.next a::attr(href)').extract_first()

if next_page is not None:

next_page = response.urljoin(next_page)

yield scrapy.Request(next_page, callback=self.parse)

现在,在提取数据后,parse()方法查找到下一页的链接,使用urljoin()方法构建完整的绝对URL(因为链接可以是相对的),并产生一个新的请求到下一个页面,将自己作为回调函数来处理下一页的数据提取,并保持遍历所有页面的抓取。

在这里您将看到Scrapy的跟随链接机制:当您在回调方法中产生请求时,Scrapy会安排发送请求并注册一个回调方法,以便在请求结束时执行。

使用这种方法,您可以根据您定义的规则构建复杂的抓取工具,并根据所访问的页面提取不同类型的数据。

在我们的例子中,它创建了一个循环,找下一页的所有链接,直到它找不到。这种做法对于抓取分页的博客,论坛和其他网站的链接是很方便的。

创建请求的快捷方式¶

您可以使用response.follow作为创建Request对象的快捷方式:

import scrapy

class QuotesSpider(scrapy.Spider):

name = "quotes"

start_urls = [

'http://quotes.toscrape.com/page/1/',

]

def parse(self, response):

for quote in response.css('div.quote'):

yield {

'text': quote.css('span.text::text').extract_first(),

'author': quote.css('span small::text').extract_first(),

'tags': quote.css('div.tags a.tag::text').extract(),

}

next_page = response.css('li.next a::attr(href)').extract_first()

if next_page is not None:

yield response.follow(next_page, callback=self.parse)

与scrapy.Request不同,response.follow直接支持相对URL - 无需调用urljoin。 请注意,response.follow只是返回一个Request实例;你仍然需要产生这个请求。

您也可以将选择器传递给response.follow代替字符串;该选择器应该提取必要的属性:

for href in response.css('li.next a::attr(href)'):

yield response.follow(href, callback=self.parse)

对于<a>元素有一个快捷方式:response.follow会自动使用它们的href属性。 因此代码可以进一步缩短:

for a in response.css('li.next a'):

yield response.follow(a, callback=self.parse)

注意

response.follow(response.css('li.next a'))无效,因为response.css返回一个包含所有结果选择器的类似列表的对象,而不是单个选择器。 如上例所示的for循环,或response.follow(response.css('li.nexta')[0])是可以的。

更多的例子和模式¶

这有另一个spider,它演示了回调和跟随链接,这次是为了抓取作者信息:

import scrapy

class AuthorSpider(scrapy.Spider):

name = 'author'

start_urls = ['http://quotes.toscrape.com/']

def parse(self, response):

# follow links to author pages

for href in response.css('.author + a::attr(href)'):

yield response.follow(href, self.parse_author)

# follow pagination links

for href in response.css('li.next a::attr(href)'):

yield response.follow(href, self.parse)

def parse_author(self, response):

def extract_with_css(query):

return response.css(query).extract_first().strip()

yield {

'name': extract_with_css('h3.author-title::text'),

'birthdate': extract_with_css('.author-born-date::text'),

'bio': extract_with_css('.author-description::text'),

}

这个spider将从主页面开始,它将follow所有作者页面的链接,对每个页面调用parse_author回调函数,同时也像我们之前看到的那样对页面链接使用parse回调。

在这里,我们将回调传递给response.follow作为位置参数以缩短代码长度;也可以使用scrapy.Request。

parse_author回调函数定义了一个从CSS查询中提取和清除数据的辅助函数,生成带有作者数据的Python字典。

这个spider演示的另一个有趣的事情是,即使多个语录有同一作者,我们也不必担心多次访问相同的作者页面。 默认情况下,Scrapy会将重复的请求过滤到已访问的URL中,避免因同一编程错误而导致服务器遇到过多的重复问题。 这可以通过设置DUPEFILTER_CLASS进行配置。

希望现在您已经对如何使用Scrapy的follow链接和回调机制有了足够的了解。

作为利用follow链接机制的另一个示例spider,请查看CrawlSpider类,以获得一个实现了简单规则引擎的通用蜘蛛,您可以在其上编写爬虫程序。

此外,常见模式是使用将附加数据传递到回调函数的技巧,对多个页面构建一个包含数据的item。

使用spider参数¶

您可以在命令行运行spider时使用-a选项提供参数:

scrapy crawl quotes -o quotes-humor.json -a tag=humor

这些参数被传递给Spider的__init__方法,并默认成为spider的属性。

在此示例中,为tag参数提供的值可通过self.tag获得。 你可以使用它来让你的spider获取带有特定标签的语录,根据参数构建URL:

import scrapy

class QuotesSpider(scrapy.Spider):

name = "quotes"

def start_requests(self):

url = 'http://quotes.toscrape.com/'

tag = getattr(self, 'tag', None)

if tag is not None:

url = url + 'tag/' + tag

yield scrapy.Request(url, self.parse)

def parse(self, response):

for quote in response.css('div.quote'):

yield {

'text': quote.css('span.text::text').extract_first(),

'author': quote.css('small.author::text').extract_first(),

}

next_page = response.css('li.next a::attr(href)').extract_first()

if next_page is not None:

yield response.follow(next_page, self.parse)

如果您将tag=humor参数传递给spider,您会注意到它只会访问humor标记中的网址,例如http//quotes.toscrape.com/tag/humor 。

您可以详细了解如何处理spider参数。

示例¶

学习的最好方法是使用示例,Scrapy也不例外。 出于这个原因,这里有名为quotesbot的Scrapy项目的例子,您可以使用它来玩和学习更多关于Scrapy的知识。 它包含两个http://quotes.toscrape.com的spider,一个使用CSS选择器,另一个使用XPath表达式。

quotesbot项目位于:https://github.com/scrapy/quotesbot。 您可以在项目的README中找到更多关于它的信息。

如果你熟悉git,你可以签出代码。 否则,您可以通过单击此处下载该项目的zip文件。

基本概念¶

命令行工具¶

0.10版本中的新功能。

Scrapy通过scrapy命令行工具进行控制,在这里被称为“Scrapy工具”,以区别于我们称之为“命令”或“Scrapy命令”的子命令。

Scrapy工具提供了多种命令,用于多种目的,并且每个命令都接受一组不同的参数和选项。

(scrapy deploy命令已在1.0版中被移除,变为独立的scrapyd-deploy。 请参阅部署项目。)

配置设置¶

Scrapy将在标准位置的ini类型scrapy.cfg文件中查找配置参数:

/etc/scrapy.cfg或c:\scrapy\scrapy.cfg(系统范围),〜/.config/scrapy.cfg($XDG_CONFIG_HOME)和〜/ .scrapy.cfg($HOME)用于全局(用户范围)设置和scrapy.cfg在scrapy项目的根目录中(请参阅下一节)。

这些文件中的设置按所列出的优先顺序进行合并:用户定义的值具有比系统范围内的默认值更高的优先级,并且在定义时,项目范围的设置将覆盖所有其他文件。

Scrapy也可以通过一些环境变量进行配置。 目前可设置:

SCRAPY_SETTINGS_MODULE(请参阅指定设置)SCRAPY_PROJECTSCRAPY_PYTHON_SHELL(请参阅Scrapy shell)

Scrapy项目的默认结构¶

在深入研究命令行工具及其子命令之前,我们先来了解Scrapy项目的目录结构。

虽然可以修改,但所有Scrapy项目默认具有相同的文件结构,与此类似:

scrapy.cfg

myproject/

__init__.py

items.py

middlewares.py

pipelines.py

settings.py

spiders/

__init__.py

spider1.py

spider2.py

...

scrapy.cfg文件所在的目录称为项目根目录。 该文件包含定义项目设置的python模块的名称。 这里是一个例子:

[settings]

default = myproject.settings

使用scrapy工具¶

您可以在开始时运行不带参数的Scrapy工具,它将打印一些使用帮助和可用的命令:

Scrapy X.Y - no active project

Usage:

scrapy <command> [options] [args]

Available commands:

crawl Run a spider

fetch Fetch a URL using the Scrapy downloader

[...]

如果您在Scrapy项目中,则第一行将打印当前活动的项目。 在这个例子中,它是在一个项目之外运行的。 如果在一个项目中运行,它会打印出如下所示的内容:

Scrapy X.Y - project: myproject

Usage:

scrapy <command> [options] [args]

[...]

创建项目¶

您通常使用scrapy工具做的第一件事是创建您的Scrapy项目:

scrapy startproject myproject [project_dir]

这将在project_dir目录下创建一个Scrapy项目。

如果未指定project_dir,则project_dir将与myproject相同。

接下来,进入新的项目目录:

cd project_dir

您可以使用scrapy命令管理和控制您的项目。

可用的工具命令¶

本节包含可用内置命令的列表及说明和一些使用示例。 请记住,您始终可以通过运行以下命令获取有关每个命令的更多信息:

scrapy <command> -h

你可以看到所有可用的命令:

scrapy -h

有两种类型的命令,一些只能在Scrapy项目中使用的命令(特定于项目的命令);另外一些可以在没有活动的Scrapy项目的情况下使用(全局命令),尽管它们在项目中运行时可能略有不同因为他们会使用项目重写设置)。

全局命令:

仅限项目的命令:

startproject命令¶

- 语法:

scrapy startproject <project_name> [project_dir] - 需要项目:不需要

在project_dir目录下创建一个名为project_name的新Scrapy项目。

如果未指定project_dir,project_dir将与project_name相同。

用法示例:

$ scrapy startproject myproject

genspider¶

- 语法:

scrapy genspider [-t template] <name> <domain> - 需要项目:不需要

如果在项目中调用,则在当前文件夹或当前项目的spiders文件夹中创建一个新Spider。 <name> 参数用来设置Spider的 name, <domain>设置allowed_domains 和 start_urls .

用法示例:

$ scrapy genspider -l

Available templates:

basic

crawl

csvfeed

xmlfeed

$ scrapy genspider example example.com

Created spider 'example' using template 'basic'

$ scrapy genspider -t crawl scrapyorg scrapy.org

Created spider 'scrapyorg' using template 'crawl'

这只是一个用预定义模板创建Spider的快捷命令,并不是创建Spider的唯一方法。 您可以自己创建Spider源代码文件,不使用此命令。

crawl¶

- 语法:

scrapy crawl <spider> - 需要项目:需要

开始使用Spider爬取。

用法示例:

$ scrapy crawl myspider

[ ... myspider starts crawling ... ]

check¶

- 语法:

scrapy check [-l] <spider> - 需要项目:需要

运行约定检查。

用法示例:

$ scrapy check -l

first_spider

* parse

* parse_item

second_spider

* parse

* parse_item

$ scrapy check

[FAILED] first_spider:parse_item

>>> 'RetailPricex' field is missing

[FAILED] first_spider:parse

>>> Returned 92 requests, expected 0..4

edit¶

- 语法:

scrapy edit <spider> - 需要项目:需要

使用EDITOR环境变量中定义的编辑器编辑给定的蜘蛛,或者(如果未设置)编辑EDITOR设置。

此命令仅作为最常见情况的便捷快捷方式提供,开发人员可以自由选择任何工具或IDE来编写和调试spider。

用法示例:

$ scrapy edit spider1

fetch¶

- 语法:

scrapy fetch <url> - 需要项目:不需要

使用Scrapy下载器下载给定的URL并将内容写到标准输出。

这个命令的有趣之处在于它抓取页面Spider如何下载它。 例如,如果Spider具有覆盖用户代理的USER_AGENT属性,它将使用该属性。

所以这个命令可以用来“看”你的spider如何获取某个页面。

如果在项目之外使用,则不会应用特定Spider的行为,它将使用默认的Scrapy下载器设置。

支持的选项:

--spider=SPIDER:绕过spider自动检测并强制使用特定的spider--headers:打印响应的HTTP headers而不是响应的正文--no-redirect:不follow HTTP 3xx重定向(默认是follow它们)

用法示例:

$ scrapy fetch --nolog http://www.example.com/some/page.html

[ ... html content here ... ]

$ scrapy fetch --nolog --headers http://www.example.com/

{'Accept-Ranges': ['bytes'],

'Age': ['1263 '],

'Connection': ['close '],

'Content-Length': ['596'],

'Content-Type': ['text/html; charset=UTF-8'],

'Date': ['Wed, 18 Aug 2010 23:59:46 GMT'],

'Etag': ['"573c1-254-48c9c87349680"'],

'Last-Modified': ['Fri, 30 Jul 2010 15:30:18 GMT'],

'Server': ['Apache/2.2.3 (CentOS)']}

view¶

- 语法:

scrapy view <url> - 需要项目:需要

在浏览器中打开给定的URL,就像Scrapy Spider“看到”的那样。 有时候,Spider看到的网页与普通用户不同,所以这可以用来检查Spider“看到”了什么,并确认它是否是你期望的。

支持的选项:

--spider=SPIDER:绕过Spider自动检测并强制使用特定的Spider--no-redirect:不follow HTTP 3xx重定向(默认是follow它们)

用法示例:

$ scrapy view http://www.example.com/some/page.html

[ ... browser starts ... ]

shell¶

- 语法:

scrapy shell [url] - 需要项目:不需要

为指定的URL(如果给定)启动Scrapy shell,如果没有给出URL,则为空。 还支持UNIX风格的本地文件路径,./或../前缀的相对路径或绝对路径。

有关更多信息,请参阅Scrapy shell。

支持的选项:

--spider=SPIDER:绕过Spider自动检测并强制使用特定的Spider-c code:获取shell中的代码,打印结果并退出--no-redirect:不follow HTTP 3xx重定向(默认是follow它们);这只会影响您在命令行上作为参数传递的URL;在shell运行时,fetch(url)默认仍然会follow HTTP重定向。

用法示例:

$ scrapy shell http://www.example.com/some/page.html

[ ... scrapy shell starts ... ]

$ scrapy shell --nolog http://www.example.com/ -c '(response.status, response.url)'

(200, 'http://www.example.com/')

# shell follows HTTP redirects by default

$ scrapy shell --nolog http://httpbin.org/redirect-to?url=http%3A%2F%2Fexample.com%2F -c '(response.status, response.url)'

(200, 'http://example.com/')

# you can disable this with --no-redirect

# (only for the URL passed as command line argument)

$ scrapy shell --no-redirect --nolog http://httpbin.org/redirect-to?url=http%3A%2F%2Fexample.com%2F -c '(response.status, response.url)'

(302, 'http://httpbin.org/redirect-to?url=http%3A%2F%2Fexample.com%2F')

parse¶

- 语法:

scrapy parse <url> [options] - 需要项目:需要

获取给定的URL,Spider使用--callback选项传递的方法或parse处理它。

支持的选项:

--spider=SPIDER:绕过spider自动检测并强制使用特定的spider--a NAME=VALUE:设置spider参数(可以重复)--callback或-c:用作spider解析响应的回调方法--meta或-m:通过回调请求传回附加请求元标签。 这必须是有效的json字符串。 例如:-meta ='{“foo”:“bar”}'--pipelines:通过pipeline处理item--rules或-r:使用CrawlSpider规则来发现用于解析响应的回调(即spider方法)--noitems:不显示被抓到的item--nolinks:不显示提取的链接--nocolour:避免使用pygments对输出着色--depth或-d:递归请求后的深度级别(默认值:1)--verbose或-v:显示每个深度级别的信息

用法示例:

$ scrapy parse http://www.example.com/ -c parse_item

[ ... scrapy log lines crawling example.com spider ... ]

>>> STATUS DEPTH LEVEL 1 <<<

# Scraped Items ------------------------------------------------------------

[{'name': u'Example item',

'category': u'Furniture',

'length': u'12 cm'}]

# Requests -----------------------------------------------------------------

[]

settings¶

- 语法:

scrapy settings [options] - 需要项目:需要

获取Scrapy设置值。

如果在项目中使用,它将显示项目设置值,否则将显示默认Scrapy设置值。

用法示例:

$ scrapy settings --get BOT_NAME

scrapybot

$ scrapy settings --get DOWNLOAD_DELAY

0

runspider¶

- 语法:

scrapy runspider <spider_file.py> - 需要项目:不需要

运行一个包含在Python文件中的Spider,而不必创建一个项目。

用法示例:

$ scrapy runspider myspider.py

[ ... spider starts crawling ... ]

version¶

- 语法:

scrapy version [-v] - 需要项目:需要

打印Scrapy版本。 如果与-v一起使用,它还会打印Python,Twisted和Platform信息,这对于错误报告很有用。

自定义项目命令¶

您还可以使用COMMANDS_MODULE设置来添加自定义项目命令。 有关如何实现自定义命令的示例,请参阅scrapy/commands中的Scrapy命令。

COMMANDS_MODULE¶

默认:''(空字符串)

用于查找自定义Scrapy命令的模块。 这用于为您的Scrapy项目添加自定义命令。

例:

COMMANDS_MODULE = 'mybot.commands'

通过setup.py入口点注册命令¶

注意

这是一个实验性功能,请谨慎使用。

您还可以通过在setup.py库文件的入口点添加scrapy.commands部分,从外部库添加Scrapy命令。

以下示例添加了my_command命令:

from setuptools import setup, find_packages

setup(name='scrapy-mymodule',

entry_points={

'scrapy.commands': [

'my_command=my_scrapy_module.commands:MyCommand',

],

},

)

Spiders¶

Spider是定义了如何抓取某个站点(或一组站点)的类,包括如何执行爬取(即follow链接)以及如何从其页面中提取结构化数据(即抓取item)。 换句话说,Spider是您定义的为抓取和解析特定网站(在某些情况下是一组网站)页面的自定义行为的地方。

对于蜘蛛来说,抓取周期会经历这样一些事情:

首先生成抓取第一个URL的初始请求,为这些请求下载的响应来调用指定回调函数。

通过调用

start_requests()方法来获得第一个执行请求,该方法默认为start_urls中指定的URL生成Request,parse方法作为请求的回调函数。在回调函数中,解析响应(网页)并返回带有提取数据的字典,

Item对象,Request对象或这些对象的迭代。 这些请求还将包含一个回调(可能是相同的),然后由Scrapy下载,通过指定的回调处理它们的响应。在回调函数中,通常使用Selectors(您也可以使用BeautifulSoup,lxml或您喜欢的任何机制)解析页面内容,并使用解析的数据生成Item。

最后,从spider返回的Item通常会被持久化到数据库(在某些Item Pipeline中)或使用Feed exports写入文件。

尽管这个周期适用于(或多或少)任何类型的Spider,但为了不同的目的,有不同类型的默认Spider捆绑到Scrapy中。 我们将在这里讨论这些类型。

scrapy.Spider¶

-

class

scrapy.spiders.Spider¶ 这是最简单的Spider,也是其他Spider必须继承的(包括与Scrapy捆绑在一起的Spider,以及自己写的Spider)。 它不提供任何特殊功能。 它只是提供一个默认的

start_requests()实现,从start_urlsspider属性发送请求,并为每个结果响应调用spider的parse方法。-

name¶ 一个字符串,它定义了这个Spider的名字。 Scrapy通过Spider名称定位(并实例化)Spider,因此它必须是唯一的。 然而,没有什么能够阻止你实例化同一个蜘蛛的多个实例。 这是最重要的Spider属性,它是必需的。

如果Spider爬取单独一个域名,通常的做法是使用域名命名Spider,无论是否使用TLD。 因此,例如,抓取

mywebsite.com的Spider通常会被命名为mywebsite。注意

在Python 2中,这只能是ASCII。

-

allowed_domains¶ 包含允许Spider抓取域的可选字符串列表。 如果启用了

OffsiteMiddleware,则不会follow不属于此列表中指定的域名(或其子域)的URL的请求。假设您的目标网址为

https://www.example.com/1.html,然后将'example.com'添加到列表中。

-

start_urls¶ 当没有特别指定的网址时,Spider将从哪个网址开始抓取的网址列表。 所以,下载的第一个页面将在这里列出。 随后的URL将从包含在起始URL中的数据中连续生成。

-

crawler¶ 该属性在初始化类后由

from_crawler()类方法设置,并链接到此spider实例绑定的Crawler对象。Crawler在项目中封装了大量组件,以便进行单一入口访问(例如扩展,中间件,信号管理器等)。 请参阅Crawler API以了解更多关于它们的信息。

-

from_crawler(crawler, *args, **kwargs)¶ 这是Scrapy用来创建Spider的类方法。

您可能不需要直接覆盖它,因为它默认实现是充当

__init__()方法的代理,用给定参数args和命名参数 kwargs 。尽管如此,该方法会在新实例中设置

crawler和settings属性,以便稍后可以在Spider代码中访问它们。Parameters:

-

start_requests()¶ 此方法必须为Spider返回可迭代的初始请求。 当Spider开始抓取时它会被Scrapy调用。 Scrapy只调用它一次,所以将

start_requests()作为发生器是安全的。默认实现为

start_urls中的每个网址生成Request(url, dont_filter=True)。如果您想更改用于开始抓取域的请求,需要覆盖此方法。 例如,如果您需要使用POST请求登录,则可以执行以下操作:

class MySpider(scrapy.Spider): name = 'myspider' def start_requests(self): return [scrapy.FormRequest("http://www.example.com/login", formdata={'user': 'john', 'pass': 'secret'}, callback=self.logged_in)] def logged_in(self, response): # 在这里你用另外一个回调函数提取follow的链接 # 并返回每个请求 pass

-

parse(response)¶ 这是Scrapy用来处理下载响应的默认回调,当请求没有指定回调时。

parse方法负责处理响应并返回抓取的数据和/或更多的URL。 其他请求回调与Spider类具有相同的要求。该方法以及任何其他Request回调一样,必须返回一个可迭代的

Request和/或字典或Item对象。Parameters: response ( response) - 解析的响应

-

log(message[, level, component])¶ 通过Spider的

logger发送日志消息的包装器,保持向后兼容性。 有关更多信息,请参阅Spider日志记录。

-

closed(reason)¶ 当Spider关闭时调用。 此方法为

spider_closed信号提供了signals.connect()的快捷方式。

-

我们来看一个例子:

import scrapy

class MySpider(scrapy.Spider):

name = 'example.com'

allowed_domains = ['example.com']

start_urls = [

'http://www.example.com/1.html',

'http://www.example.com/2.html',

'http://www.example.com/3.html',

]

def parse(self, response):

self.logger.info('A response from %s just arrived!', response.url)

从单个回调中返回多个请求和Item:

import scrapy

class MySpider(scrapy.Spider):

name = 'example.com'

allowed_domains = ['example.com']

start_urls = [

'http://www.example.com/1.html',

'http://www.example.com/2.html',

'http://www.example.com/3.html',

]

def parse(self, response):

for h3 in response.xpath('//h3').extract():

yield {"title": h3}

for url in response.xpath('//a/@href').extract():

yield scrapy.Request(url, callback=self.parse)

您可以直接使用start_requests()来代替start_urls; 如果想为数据提供更多结构,您可以使用Item:

import scrapy

from myproject.items import MyItem

class MySpider(scrapy.Spider):

name = 'example.com'

allowed_domains = ['example.com']

def start_requests(self):

yield scrapy.Request('http://www.example.com/1.html', self.parse)

yield scrapy.Request('http://www.example.com/2.html', self.parse)

yield scrapy.Request('http://www.example.com/3.html', self.parse)

def parse(self, response):

for h3 in response.xpath('//h3').extract():

yield MyItem(title=h3)

for url in response.xpath('//a/@href').extract():

yield scrapy.Request(url, callback=self.parse)

Spider参数¶

Spider可以接收修改其行为的参数。 Spider参数的一些常见用途是定义起始URL或将爬虫限制到站点的某些部分,但它们可用于配置Spider的任何功能。

使用crawl命令-a选项传递Spider参数。 例如:

scrapy crawl myspider -a category=electronics

Spider可以在它的__init__方法中访问参数:

import scrapy

class MySpider(scrapy.Spider):

name = 'myspider'

def __init__(self, category=None, *args, **kwargs):

super(MySpider, self).__init__(*args, **kwargs)

self.start_urls = ['http://www.example.com/categories/%s' % category]

# ...

默认的__init__方法将获取所有Spider参数并将其作为属性复制到Spider中。 上面的例子也可以写成如下:

import scrapy

class MySpider(scrapy.Spider):

name = 'myspider'

def start_requests(self):

yield scrapy.Request('http://www.example.com/categories/%s' % self.category)

请注意,Spider参数只能是字符串。 Spider不会自行解析。 如果要从命令行设置start_urls属性,则必须使用类似ast.literal_eval或json.loads的方式将它解析为列表然后将其设置为属性。 否则,将导致迭代start_urls字符串(一个非常常见的python陷阱),使每个字符被视为一个单独的url。

有效的用例是设置由HttpAuthMiddleware使用的http认证凭证或由UserAgentMiddleware使用的用户代理:

scrapy crawl myspider -a http_user=myuser -a http_pass=mypassword -a user_agent=mybot

Spider参数也可以通过Scrapyd的schedule.json API传递。

参见Scrapyd文档。

通用Spider¶

Scrapy附带了一些有用的通用Spider,您可以继承它们。 它们的目标是为一些常见的抓取案例提供方便的功能,例如以特定规则follow网站上的所有链接,从Sitemaps抓取或解析XML/CSV feed。

对于以下蜘蛛中使用的示例,我们假设您有一个项目,其中包含在myproject.items模块中声明的TestItem:

import scrapy

class TestItem(scrapy.Item):

id = scrapy.Field()

name = scrapy.Field()

description = scrapy.Field()

CrawlSpider¶

-

class

scrapy.spiders.CrawlSpider¶ 这是抓取常规网站最常用的Spider,因为它提供了一个通过定义一组规则来follow链接的便捷机制。 它可能不是最适合您的特定网站或项目的,但它对于多种情况是足够通用的,所以您可以从它开始并根据需要覆盖它以获得更多自定义功能,或者只是实现您自己的Spider。

除了从Spider继承的属性(必须指定)之外,该类还支持一个新的属性:

这个Spider也暴露了一个可覆盖的方法:

抓取规则¶

-

class

scrapy.spiders.Rule(link_extractor, callback=None, cb_kwargs=None, follow=None, process_links=None, process_request=None)¶ link_extractor是一个链接提取器对象,它定义了如何从每个已爬网页中提取链接。callback是可调用的或字符串(在这种情况下,将使用具有该名称的Spider对象的方法)被使用指定的link_extractor提取的链接调用。 这个回调接收一个响应作为它的第一个参数,并且必须返回一个包含Item和/或Request对象(或者它们的任何子类)的列表。警告

编写爬虫rule时,避免使用

parse作为回调,因为CrawlSpider使用parse方法来实现其逻辑。 因此,如果您重写parse方法,抓取Spider将不再起作用。cb_kwargs是一个包含要传递给回调函数的关键字参数字典。follow是一个布尔值,它指定是否应该使用此规则提取的每个响应follow链接。 如果callback为None,follow默认为True,否则follow默认为False。process_links是可调用的或字符串(在这种情况下,将使用具有该名称的spider对象的方法),将被使用指定的link_extractor的响应提取到的链接调用。 这主要用于过滤目的。process_request是一个可调用的或一个字符串(在这种情况下,将使用具有该名称的spider对象的方法),这个方法将被该规则提取的每个请求调用,并且必须返回一个请求或None(用于过滤请求)。

CrawlSpider示例¶

现在我们来看一个带有规则的示例CrawlSpider:

import scrapy

from scrapy.spiders import CrawlSpider, Rule

from scrapy.linkextractors import LinkExtractor

class MySpider(CrawlSpider):

name = 'example.com'

allowed_domains = ['example.com']

start_urls = ['http://www.example.com']

rules = (

# 提取匹配'category.php'的链接(但不匹配'subsection.php')

# 然后follow这些链接(因为没有callback参数意味着默认follow=True)

Rule(LinkExtractor(allow=('category\.php', ), deny=('subsection\.php', ))),

# 提取匹配'item.php'的链接,然后用Spider的parse_item解析它们

Rule(LinkExtractor(allow=('item\.php', )), callback='parse_item'),

)

def parse_item(self, response):

self.logger.info('Hi, this is an item page! %s', response.url)

item = scrapy.Item()

item['id'] = response.xpath('//td[@id="item_id"]/text()').re(r'ID: (\d+)')

item['name'] = response.xpath('//td[@id="item_name"]/text()').extract()

item['description'] = response.xpath('//td[@id="item_description"]/text()').extract()

return item

这个Spider将开始抓取example.com的主页,收集category链接和item链接,用parse_item方法解析后者。 对于每个Item响应,将使用XPath从HTML中提取一些数据填充Item。

XMLFeedSpider¶

-

class

scrapy.spiders.XMLFeedSpider¶ XMLFeedSpider旨在通过遍历特定节点名称来解析XML提要。 迭代器可以从

iternodes,xml和html中选择。 出于性能原因,建议使用iternodes迭代器,因为xml和html迭代器会一次生成整个DOM以解析它。 但是,使用html作为迭代器时,可能会在解析具有错误标记的XML时很有用。要设置迭代器和标签名称,您必须定义以下类属性:

-

iterator¶ 一个定义要使用的迭代器的字符串。 它可以是:

默认为:

'iternodes'。

-

itertag¶ 一个字符串,其中包含要迭代的节点(或元素)的名称。 例:

itertag = 'product'

-

namespaces¶ 定义spider将要处理的文档中可用命名空间的

(prefix, uri)元组列表。prefix和uri将用于使用register_namespace()方法自动注册名称空间。然后可以在

itertag属性中指定具有名称空间的节点。例:

class YourSpider(XMLFeedSpider): namespaces = [('n', 'http://www.sitemaps.org/schemas/sitemap/0.9')] itertag = 'n:url' # ...

除了这些新的属性外,这个Spider还有以下可覆盖的方法:

-

adapt_response(response)¶ 在Spider开始分析它之前,一旦收到响应就传到Spider中间件中。 它可以用来在解析响应之前修改响应主体。 这个方法接收一个响应,并返回一个响应(它可能是相同的或另一个)。

-

parse_node(response, selector)¶ 对于与提供的标签名称匹配的节点(

itertag),将调用此方法。 接收每个节点的响应和Selector。 覆盖此方法是强制性的。 否则,你的Spider将无法工作。 该方法必须返回一个Item对象或一个Request对象或包含它们的迭代器。

-

process_results(response, results)¶ 该方法针对Spider所返回的每个结果(Item或请求)进行调用,并且它在将结果返回给框架核心之前执行所需的最后一次处理,例如设置Item ID。 它接收结果列表和这些结果的源响应。 它必须返回结果列表(Item或请求)。

-

XMLFeedSpider示例¶

这些Spider很容易使用,让我们来看一个例子:

from scrapy.spiders import XMLFeedSpider

from myproject.items import TestItem

class MySpider(XMLFeedSpider):

name = 'example.com'

allowed_domains = ['example.com']

start_urls = ['http://www.example.com/feed.xml']

iterator = 'iternodes' # 实际上这个是非必须的,因为iternodes是默认值

itertag = 'item'

def parse_node(self, response, node):

self.logger.info('Hi, this is a <%s> node!: %s', self.itertag, ''.join(node.extract()))

item = TestItem()

item['id'] = node.xpath('@id').extract()

item['name'] = node.xpath('name').extract()

item['description'] = node.xpath('description').extract()

return item

基本上我们做的是创建一个Spider,它从给定的start_urls下载一个feed,然后迭代它的每个item标签,打印出来并存储一些随机数据到Item中。

CSVFeedSpider¶

-

class

scrapy.spiders.CSVFeedSpider¶ 这个Spider与XMLFeedSpider非常相似,只是它遍历行而不是节点。 每次迭代调用的方法是

parse_row()。-

delimiter¶ 包含CSV文件中每个字段的分隔符的字符串,默认为

','(逗号)。

-

quotechar¶ 包含CSV文件中每个字段的外围字符的字符串,默认为

'“'(引号)。

-

headers¶ CSV文件中列名称的列表。

-

parse_row(response, row)¶ 接收一个响应和一个以被提供(或被检测)的CSV文件头作为关键字的字典(代表每行)。 这个Spider还有机会覆盖用于预处理和后置处理目的的

adapt_response和process_results方法。

-

CSVFeedSpider示例¶

我们来看一个与前一个类似的例子,但是使用CSVFeedSpider:

from scrapy.spiders import CSVFeedSpider

from myproject.items import TestItem

class MySpider(CSVFeedSpider):

name = 'example.com'

allowed_domains = ['example.com']

start_urls = ['http://www.example.com/feed.csv']

delimiter = ';'

quotechar = "'"

headers = ['id', 'name', 'description']

def parse_row(self, response, row):

self.logger.info('Hi, this is a row!: %r', row)

item = TestItem()

item['id'] = row['id']

item['name'] = row['name']

item['description'] = row['description']

return item

SitemapSpider¶

-

class

scrapy.spiders.SitemapSpider¶ SitemapSpider允许您通过使用Sitemaps发现网址来抓取网站。

它支持嵌套站点地图并从robots.txt中发现站点地图网址。

-

sitemap_urls¶ 指向您要抓取的网址的站点地图的网址列表。

您也可以指向一个robots.txt,将其解析并从中提取站点地图网址。

-

sitemap_rules¶ 一个元组列表

(regex, callback),其中:regex是一个正则表达式,用于匹配从站点地图提取的网址。regex可以是字符串或编译的正则表达式对象。- callback 是用于处理匹配正则表达式的url的回调。

callback可以是一个字符串(指定spider的方法名)或可调用的。

例如:

sitemap_rules = [('/product/', 'parse_product')]规则按顺序应用,只有第一个匹配的将被使用。

如果你省略了这个属性,所有在站点地图中找到的URL都会用

parse回调进行处理。

-

sitemap_alternate_links¶ 指定是否应该follow一个

url的备用链接。 这些是在同一个url块中传递的另一种语言的同一网站的链接。例如:

<url> <loc>http://example.com/</loc> <xhtml:link rel="alternate" hreflang="de" href="http://example.com/de"/> </url>

通过设置

sitemap_alternate_links,将检索两个网址。 禁用sitemap_alternate_links时,只会检索http://example.com/。sitemap_alternate_links默认禁用。

-

SitemapSpider示例¶

最简单的例子:使用parse回调处理通过站点地图发现的所有网址:

from scrapy.spiders import SitemapSpider

class MySpider(SitemapSpider):

sitemap_urls = ['http://www.example.com/sitemap.xml']

def parse(self, response):

pass # ... scrape item here ...

使用指定的回调处理某些URL,对其他URL使用不同的回调:

from scrapy.spiders import SitemapSpider

class MySpider(SitemapSpider):

sitemap_urls = ['http://www.example.com/sitemap.xml']

sitemap_rules = [

('/product/', 'parse_product'),

('/category/', 'parse_category'),

]

def parse_product(self, response):

pass # ... scrape product ...

def parse_category(self, response):

pass # ... scrape category ...

followrobots.txt文件中定义的站点地图,并且只follow链接中包含/ sitemap_shop的站点地图:

from scrapy.spiders import SitemapSpider

class MySpider(SitemapSpider):

sitemap_urls = ['http://www.example.com/robots.txt']

sitemap_rules = [

('/shop/', 'parse_shop'),

]

sitemap_follow = ['/sitemap_shops']

def parse_shop(self, response):

pass # ... scrape shop here ...

将SitemapSpider与其他网址来源相结合:

from scrapy.spiders import SitemapSpider

class MySpider(SitemapSpider):

sitemap_urls = ['http://www.example.com/robots.txt']

sitemap_rules = [

('/shop/', 'parse_shop'),

]

other_urls = ['http://www.example.com/about']

def start_requests(self):

requests = list(super(MySpider, self).start_requests())

requests += [scrapy.Request(x, self.parse_other) for x in self.other_urls]

return requests

def parse_shop(self, response):

pass # ... scrape shop here ...

def parse_other(self, response):

pass # ... scrape other here ...

选择器¶

在抓取网页时,需要执行的最常见任务是从HTML源中提取数据。 有几个库可以实现这一点:

- BeautifulSoup是Python程序员中非常流行的网络抓取库,它基于HTML代码的结构构建Python对象,还能适当地处理损坏的标记,但它有一个缺点:速度很慢。

- lxml是一个基于ElementTree的pythonic API的XML解析库(它也解析HTML)。 (lxml不是Python标准库的一部分。)

Scrapy自带提取数据的机制。 它们被称为选择器,因为它们“选择”由XPath或CSS表达式指定的HTML文档的某些部分。

XPath是用于选择XML文档中的节点的语言,也可以用于HTML。 CSS是一种将样式应用于HTML文档的语言。 它定义选择器将这些样式与特定的HTML元素相关联。

Scrapy选择器是建立在lxml库上的,这意味着它们在速度和解析精度上非常相似。

这个页面解释了选择器是如何工作的,并描述了它们小且简单的API,不像lxml API那样大,因为lxml库除了选择标记文件还可以用于许多其他任务,。

有关选择器API的完整参考,请参阅选择器参考

使用选择器¶

构造选择器¶

Scrapy选择器是被构造用来传递text或TextResponse对象的Selector类的实例。 它会根据输入类型(XML vs HTML)自动选择最佳的解析规则:

>>> from scrapy.selector import Selector

>>> from scrapy.http import HtmlResponse

从文本构建:

>>> body = '<html><body><span>good</span></body></html>'

>>> Selector(text=body).xpath('//span/text()').extract()

[u'good']

从响应构建:

>>> response = HtmlResponse(url='http://example.com', body=body)

>>> Selector(response=response).xpath('//span/text()').extract()

[u'good']

为方便起见,响应对象在.selector属性上显示选择器,完全可以在可能的情况下使用此快捷方式:

>>> response.selector.xpath('//span/text()').extract()

[u'good']

使用选择器¶

为了解释如何使用选择器,我们将使用Scrapy文档服务器中的Scrapy shell(提供交互式测试)和一个示例页面:

这是它的HTML代码:

<html>

<head>

<base href='http://example.com/' />

<title>Example website</title>

</head>

<body>

<div id='images'>

<a href='image1.html'>Name: My image 1 <br /><img src='image1_thumb.jpg' /></a>

<a href='image2.html'>Name: My image 2 <br /><img src='image2_thumb.jpg' /></a>

<a href='image3.html'>Name: My image 3 <br /><img src='image3_thumb.jpg' /></a>

<a href='image4.html'>Name: My image 4 <br /><img src='image4_thumb.jpg' /></a>

<a href='image5.html'>Name: My image 5 <br /><img src='image5_thumb.jpg' /></a>

</div>

</body>

</html>

首先,我们打开shell:

scrapy shell https://doc.scrapy.org/en/latest/_static/selectors-sample1.html

然后,在加载shell之后,您得到的响应将成为response shell变量,响应附加的选择器为response.selector属性。

由于我们正在处理HTML,选择器将自动使用HTML解析器。

因此,通过查看该页面的HTML代码,我们构建一个用于选择标题标签内的文本的XPath:

>>> response.selector.xpath('//title/text()')

[<Selector (text) xpath=//title/text()>]

使用XPath和CSS查询响应非常常见,以至于响应包含两个快捷途径:response.xpath()和response.css():

>>> response.xpath('//title/text()')

[<Selector (text) xpath=//title/text()>]

>>> response.css('title::text')

[<Selector (text) xpath=//title/text()>]

如您所见,.xpath()和.css()方法返回一个SelectorList实例,该实例是新选择器的列表。 该API可用于快速选择嵌套数据:

>>> response.css('img').xpath('@src').extract()

[u'image1_thumb.jpg',

u'image2_thumb.jpg',

u'image3_thumb.jpg',

u'image4_thumb.jpg',

u'image5_thumb.jpg']

要实际提取文本数据,您必须调用选择器.extract()方法,如下所示:

>>> response.xpath('//title/text()').extract()

[u'Example website']

如果你只想提取第一个匹配的元素,你可以调用选择器.extract_first()

>>> response.xpath('//div[@id="images"]/a/text()').extract_first()

u'Name: My image 1 '

如果找不到元素,它将返回None:

>>> response.xpath('//div[@id="not-exists"]/text()').extract_first() is None

True

默认返回值可以作为参数提供,用来代替None:

>>> response.xpath('//div[@id="not-exists"]/text()').extract_first(default='not-found')

'not-found'

请注意,CSS选择器可以使用CSS3伪元素选择文本或属性节点:

>>> response.css('title::text').extract()

[u'Example website']

现在我们要获取基本URL和一些图像链接:

>>> response.xpath('//base/@href').extract()

[u'http://example.com/']

>>> response.css('base::attr(href)').extract()

[u'http://example.com/']

>>> response.xpath('//a[contains(@href, "image")]/@href').extract()

[u'image1.html',

u'image2.html',

u'image3.html',

u'image4.html',

u'image5.html']

>>> response.css('a[href*=image]::attr(href)').extract()

[u'image1.html',

u'image2.html',

u'image3.html',

u'image4.html',

u'image5.html']

>>> response.xpath('//a[contains(@href, "image")]/img/@src').extract()

[u'image1_thumb.jpg',

u'image2_thumb.jpg',

u'image3_thumb.jpg',

u'image4_thumb.jpg',

u'image5_thumb.jpg']

>>> response.css('a[href*=image] img::attr(src)').extract()

[u'image1_thumb.jpg',

u'image2_thumb.jpg',

u'image3_thumb.jpg',

u'image4_thumb.jpg',

u'image5_thumb.jpg']

嵌套选择器¶

选择方法(.xpath()或.css())会返回相同类型的选择器列表,因此您也可以对这些选择器调用选择方法。 这是一个例子:

>>> links = response.xpath('//a[contains(@href, "image")]')

>>> links.extract()

[u'<a href="image1.html">Name: My image 1 <br><img src="image1_thumb.jpg"></a>',

u'<a href="image2.html">Name: My image 2 <br><img src="image2_thumb.jpg"></a>',

u'<a href="image3.html">Name: My image 3 <br><img src="image3_thumb.jpg"></a>',

u'<a href="image4.html">Name: My image 4 <br><img src="image4_thumb.jpg"></a>',

u'<a href="image5.html">Name: My image 5 <br><img src="image5_thumb.jpg"></a>']

>>> for index, link in enumerate(links):

... args = (index, link.xpath('@href').extract(), link.xpath('img/@src').extract())

... print 'Link number %d points to url %s and image %s' % args

Link number 0 points to url [u'image1.html'] and image [u'image1_thumb.jpg']

Link number 1 points to url [u'image2.html'] and image [u'image2_thumb.jpg']

Link number 2 points to url [u'image3.html'] and image [u'image3_thumb.jpg']

Link number 3 points to url [u'image4.html'] and image [u'image4_thumb.jpg']

Link number 4 points to url [u'image5.html'] and image [u'image5_thumb.jpg']

使用具有正则表达式的选择器¶

Selector也有一个使用正则表达式提取数据的.re()方法。 然而,与.xpath()或.css()方法不同,.re()返回unicode字符串列表。 所以你不能构造嵌套的.re()调用。

以下是一个用于从HTML代码中提取图像名称的示例:

>>> response.xpath('//a[contains(@href, "image")]/text()').re(r'Name:\s*(.*)')

[u'My image 1',

u'My image 2',

u'My image 3',

u'My image 4',

u'My image 5']

.re()有一个额外的类似.extract_first()辅助,名为.re_first()。 使用它只提取第一个匹配的字符串:

>>> response.xpath('//a[contains(@href, "image")]/text()').re_first(r'Name:\s*(.*)')

u'My image 1'

使用相对XPaths ¶

请记住,如果您正在嵌套选择器并使用以/开头的XPath,则该XPath对文档是绝对的,而不是相对于它来自的Selector。

例如,假设你想提取<div>中所有的<p> 元素。 首先,你获取所有的<div>元素:

>>> divs = response.xpath('//div')

起初,您可能会尝试使用以下方法,这是错误的,因为它实际上会提取文件中所有<p>,不只是<div>元素中的。

>>> for p in divs.xpath('//p'): # 这是错的 - 获取整个文件的<p>

... print p.extract()

这是做到这一点的正确方法(注意.//p XPath的点前缀):

>>> for p in divs.xpath('.//p'): # 提取内部所有的<p>

... print p.extract()

另一个常见的情况是提取所有直接的<p>子节点:

>>> for p in divs.xpath('p'):

... print p.extract()

有关相对XPath的更多详细信息,请参阅XPath规范中的位置路径部分。

XPath表达式中的变量¶

XPath允许使用$ somevariable语法引用XPath表达式中的变量。 这有些类似于SQL中的参数化查询或预先声明,您可以用占位符替换查询中的某些参数像是 ?,然后用查询传递的值替换它们。

下面是一个基于其“id”属性值匹配元素的示例,不用对其进行硬编码(预先给定):

>>> # `$val` 用在表达式中, `val`参数需要被传递

>>> response.xpath('//div[@id=$val]/a/text()', val='images').extract_first()

u'Name: My image 1 '

这里有另一个例子,找到有5个<a>子元素的<div>元素的“id”属性(在这里我们传递一个整数值5):

>>> response.xpath('//div[count(a)=$cnt]/@id', cnt=5).extract_first()

u'images'

调用.xpath()时,所有变量引用都必须具有绑定值(否则您将得到 ValueError: XPath error: 异常)。

这是通过根据需要传递许多命名参数来完成的。

使用EXSLT扩展名¶

构建在lxml之上,Scrapy选择器还支持一些EXSLT扩展,并附带这些预先注册的名称空间以用于XPath表达式中:

| prefix | namespace | usage |

|---|---|---|

| re | http://exslt.org/regular-expressions | regular expressions |

| set | http://exslt.org/sets | set manipulation |

正则表达式¶

例如,当XPath的starts-with()或contains()功能不足时,test()函数可能非常有用。

使用以数字结尾的“class”属性选择列表项中链接的示例:

>>> from scrapy import Selector

>>> doc = """

... <div>

... <ul>

... <li class="item-0"><a href="link1.html">first item</a></li>

... <li class="item-1"><a href="link2.html">second item</a></li>

... <li class="item-inactive"><a href="link3.html">third item</a></li>

... <li class="item-1"><a href="link4.html">fourth item</a></li>

... <li class="item-0"><a href="link5.html">fifth item</a></li>

... </ul>

... </div>

... """

>>> sel = Selector(text=doc, type="html")

>>> sel.xpath('//li//@href').extract()

[u'link1.html', u'link2.html', u'link3.html', u'link4.html', u'link5.html']

>>> sel.xpath('//li[re:test(@class, "item-\d$")]//@href').extract()

[u'link1.html', u'link2.html', u'link4.html', u'link5.html']

>>>

警告

C语言库libxslt本身不支持EXSLT正则表达式,所以lxml实现时对Python的re模块使用了钩子。

因此,在XPath表达式中使用正则表达式函数可能会增加一点性能损失。

设置操作¶

例如,在提取文本元素之前,这些操作可以方便地排除文档树的部分内容。

使用itemscopes组和相应的itemprops提取微数据(从http://schema.org/Product取得的样本内容)示例:

>>> doc = """

... <div itemscope itemtype="http://schema.org/Product">

... <span itemprop="name">Kenmore White 17" Microwave</span>

... <img src="kenmore-microwave-17in.jpg" alt='Kenmore 17" Microwave' />

... <div itemprop="aggregateRating"

... itemscope itemtype="http://schema.org/AggregateRating">

... Rated <span itemprop="ratingValue">3.5</span>/5

... based on <span itemprop="reviewCount">11</span> customer reviews

... </div>

...

... <div itemprop="offers" itemscope itemtype="http://schema.org/Offer">

... <span itemprop="price">$55.00</span>

... <link itemprop="availability" href="http://schema.org/InStock" />In stock

... </div>

...

... Product description:

... <span itemprop="description">0.7 cubic feet countertop microwave.

... Has six preset cooking categories and convenience features like

... Add-A-Minute and Child Lock.</span>

...

... Customer reviews:

...

... <div itemprop="review" itemscope itemtype="http://schema.org/Review">

... <span itemprop="name">Not a happy camper</span> -

... by <span itemprop="author">Ellie</span>,

... <meta itemprop="datePublished" content="2011-04-01">April 1, 2011

... <div itemprop="reviewRating" itemscope itemtype="http://schema.org/Rating">

... <meta itemprop="worstRating" content = "1">

... <span itemprop="ratingValue">1</span>/

... <span itemprop="bestRating">5</span>stars

... </div>

... <span itemprop="description">The lamp burned out and now I have to replace

... it. </span>

... </div>

...

... <div itemprop="review" itemscope itemtype="http://schema.org/Review">

... <span itemprop="name">Value purchase</span> -

... by <span itemprop="author">Lucas</span>,

... <meta itemprop="datePublished" content="2011-03-25">March 25, 2011

... <div itemprop="reviewRating" itemscope itemtype="http://schema.org/Rating">

... <meta itemprop="worstRating" content = "1"/>

... <span itemprop="ratingValue">4</span>/

... <span itemprop="bestRating">5</span>stars

... </div>

... <span itemprop="description">Great microwave for the price. It is small and

... fits in my apartment.</span>

... </div>

... ...

... </div>

... """

>>> sel = Selector(text=doc, type="html")

>>> for scope in sel.xpath('//div[@itemscope]'):

... print "current scope:", scope.xpath('@itemtype').extract()

... props = scope.xpath('''

... set:difference(./descendant::*/@itemprop,

... .//*[@itemscope]/*/@itemprop)''')

... print " properties:", props.extract()

... print

current scope: [u'http://schema.org/Product']

properties: [u'name', u'aggregateRating', u'offers', u'description', u'review', u'review']

current scope: [u'http://schema.org/AggregateRating']

properties: [u'ratingValue', u'reviewCount']

current scope: [u'http://schema.org/Offer']

properties: [u'price', u'availability']

current scope: [u'http://schema.org/Review']

properties: [u'name', u'author', u'datePublished', u'reviewRating', u'description']

current scope: [u'http://schema.org/Rating']

properties: [u'worstRating', u'ratingValue', u'bestRating']

current scope: [u'http://schema.org/Review']

properties: [u'name', u'author', u'datePublished', u'reviewRating', u'description']

current scope: [u'http://schema.org/Rating']

properties: [u'worstRating', u'ratingValue', u'bestRating']

>>>

在这里,我们首先迭代itemscope元素,在每个元素中查找所有itemprops元素并排除那些位于另一个itemscope内的元素。

一些XPath提示¶

这里有一些在Scrapy选择器中使用XPath时可能会有用的提示,这些提示基于ScrapingHub博客的帖子。 如果您还不太熟悉XPath,那么您可能需要先看看这个XPath教程。

在条件中使用文本节点¶

当您需要将文本内容用作XPath字符串函数的参数时,请避免使用.//text()并仅使用 . 代替。

这是因为表达式.//text()会产生一组文本元素 - 节点集。

当一个节点集被转换成一个字符串,作为参数传递给一个字符串函数如contains()或starts-with()时,返回结果将是文本的第一个元素。

例:

>>> from scrapy import Selector

>>> sel = Selector(text='<a href="#">Click here to go to the <strong>Next Page</strong></a>')

将节点集转换为字符串:

>>> sel.xpath('//a//text()').extract() # take a peek at the node-set

[u'Click here to go to the ', u'Next Page']

>>> sel.xpath("string(//a[1]//text())").extract() # convert it to string

[u'Click here to go to the ']

然而,将一个节点转换为一个字符串,将会获得它自身加上所有后代的文本:

>>> sel.xpath("//a[1]").extract() # select the first node

[u'<a href="#">Click here to go to the <strong>Next Page</strong></a>']

>>> sel.xpath("string(//a[1])").extract() # convert it to string

[u'Click here to go to the Next Page']

因此,在这种情况下,使用.//text()节点集不会选择任何内容:

>>> sel.xpath("//a[contains(.//text(), 'Next Page')]").extract()

[]

但使用 . 代表节点是可行的:

>>> sel.xpath("//a[contains(., 'Next Page')]").extract()

[u'<a href="#">Click here to go to the <strong>Next Page</strong></a>']

注意//node[1]和(//node)[1]之间的区别¶

//node[1] 选择所有在它们各自父项下第一个节点。

(// node)[1]选择文档中的所有节点,然后仅获取它们中的第一个节点。

例:

>>> from scrapy import Selector

>>> sel = Selector(text="""

....: <ul class="list">

....: <li>1</li>

....: <li>2</li>

....: <li>3</li>

....: </ul>

....: <ul class="list">

....: <li>4</li>

....: <li>5</li>

....: <li>6</li>

....: </ul>""")

>>> xp = lambda x: sel.xpath(x).extract()

获取所有的第一个<li>,无论它们的父母是什么:

>>> xp("//li[1]")

[u'<li>1</li>', u'<li>4</li>']

获取整个文档的<li>元素:

>>> xp("(//li)[1]")

[u'<li>1</li>']

获取所有<ul>元素中的第一个<li>子元素:

>>> xp("//ul/li[1]")

[u'<li>1</li>', u'<li>4</li>']

这将得到整个文档中第一个在<ul>父元素中的<li>元素:

>>> xp("(//ul/li)[1]")

[u'<li>1</li>']

按类查询时,请考虑使用CSS ¶

由于一个元素可以包含多个CSS类,因此按类选择元素的XPath方法相当冗长:

*[contains(concat(' ', normalize-space(@class), ' '), ' someclass ')]

如果你使用@class='someclass',你最终可能会丢失具有其他类的元素,如果你只是使用contains(@class, 'someclass')来弥补这一点,那么当它们有不同的类名称来共享字符串someclass时,您可能会得到比你想要的更多的元素。

事实证明,Scrapy选择器允许您链接选择器,所以大多数情况下,您可以使用CSS按类选择,然后在需要时切换到XPath:

>>> from scrapy import Selector

>>> sel = Selector(text='<div class="hero shout"><time datetime="2014-07-23 19:00">Special date</time></div>')

>>> sel.css('.shout').xpath('./time/@datetime').extract()

[u'2014-07-23 19:00']

这比使用上面使用的冗长的XPath技巧更清晰。 记得使用 . 在随后的XPath表达式中。

内置选择器参考¶

选择器对象¶

-

class

scrapy.selector.Selector(response=None, text=None, type=None)¶ Selector的实例是选择对响应内容某一部分的封装。response是将被用于选择和提取数据的HtmlResponse或XmlResponse对象.text是unicode字符串或utf-8编码文本,当response不可用时使用。 同时使用text和response是未定义的行为。type定义了选择器类型,它可以是“html”,“xml”或None(默认)。如果

type是None,那么选择器将自动根据response类型选择最佳类型(请参见下文),如果与text一起使用则默认为“html “。如果

type为None且传递了response,则从响应类型推断选择器类型关系如下:"html"forHtmlResponsetype"xml"forXmlResponsetype"html"for anything else

否则,如果设置了

type,则选择器类型将被强制且不会进行检测。-

xpath(query)¶ 找到与xpath

query匹配的节点,并将结果作为带有所有展平元素的SelectorList实例返回. 列表元素也实现Selector接口。query是一个包含要应用的XPATH查询的字符串。注意

为方便起见,此方法可写成

response.xpath()

-

css(query)¶ 应用给定的CSS选择器并返回一个

SelectorList实例。query是一个包含要应用的CSS选择器的字符串。在后台,会使用cssselect库将CSS查询转换为XPath查询并运行

.xpath()方法。注意

为了方便起见,这个方法可以写成

response.css()

-

extract()¶ 序列化并返回匹配的节点作为unicode字符串列表。 Percent encoded content is unquoted.

-

re(regex)¶ 应用给定的正则表达式并返回匹配到的unicode字符串列表。

regex可以是编译的正则表达式,也可以是使用re.compile(egex)编译为正则表达式的字符串注意

请注意,

re()和re_first()都解码HTML实体(除了<和&)

-

remove_namespaces()¶ 删除所有名称空间,允许使用不含名称空间的xpaths来遍历文档。 见下面的例子。

SelectorList objects¶

-

class

scrapy.selector.SelectorList¶ SelectorList类是内置的list类的一个子类,它提供了一些额外的方法。-

xpath(query)¶ 为此列表中的每个元素调用

.xpath()方法,并将其结果展平为另一个SelectorList。query与Selector.xpath()中的参数相同

-

css(query)¶ 对此列表中每个元素调用

.css()方法,并将其结果展平为另一个SelectorList。query与Selector.css()中的参数相同

-

extract()¶ 为此列表中的每个元素调用

.extract()方法,并将其结果展平,作为unicode字符串列表。

-

re()¶ 对此列表中每个元素调用

.re()方法,并将其结果展平,作为unicode字符串列表。

-

HTML响应选择器示例¶

这里有几个Selector的例子用来说明几个概念。

在所有情况下,我们都假设已经有一个Selector用HtmlResponse对象实例化,如下所示:

sel = Selector(html_response)

从HTML响应主体中选择全部

<h1>元素,返回一个Selector对象列表(即 一个SelectorList对象):sel.xpath("//h1")

从HTML响应主体中提取所有

<h1>文本,返回一个Unicode字符串列表:sel.xpath("//h1").extract() # this includes the h1 tag sel.xpath("//h1/text()").extract() # this excludes the h1 tag

遍历所有

<p>标签,打印出他们的类属性:for node in sel.xpath("//p"): print node.xpath("@class").extract()

XML响应选择器示例¶

这里有几个例子来说明几个概念。 在这两种情况下,我们都假定已经有一个Selector用XmlResponse对象实例化,如下所示:

sel = Selector(xml_response)

从XML响应主体中选择全部

<product>元素,返回一个Selector对象列表,(即 一个SelectorList对象):sel.xpath("//product")

从Google Base XML Feed中提取所有的价格需要注册命名空间:

sel.register_namespace("g", "http://base.google.com/ns/1.0") sel.xpath("//g:price").extract()

删除命名空间¶

在处理抓取项目时,通常完全摆脱名称空间并仅使用元素名称来编写更简单/便捷的XPath非常方便。 您可以使用Selector.remove_namespaces()方法做到这点。

我们来看一个用GitHub博客atom feed来说明的例子。

首先,用我们想要抓取的url打开shell:

$ scrapy shell https://github.com/blog.atom

一旦进入shell,我们可以尝试选择所有的<link> 对象,发现它不工作(因为这个Atom XML命名空间使节点模糊)

>>> response.xpath("//link")

[]

但是一旦我们调用了Selector.remove_namespaces()方法,所有节点都可以直接通过它们的名字来访问:

>>> response.selector.remove_namespaces()

>>> response.xpath("//link")

[<Selector xpath='//link' data=u'<link xmlns="http://www.w3.org/2005/Atom'>,

<Selector xpath='//link' data=u'<link xmlns="http://www.w3.org/2005/Atom'>,

...

如果您想知道为什么命名空间删除过程并不总是被默认调用从而而不必手动调用它,这是因为两个原因,按照相关性顺序,这两个原因是:

- 删除名称空间需要迭代和修改文档中的所有节点,这对于Scrapy搜索的所有文档来说是相当昂贵的操作

- 在某些情况下,实际上需要使用名称空间,以防某些元素名称在名称空间之间发生冲突。 虽然这种情况非常罕见。

Items¶

抓取的主要目标是从非结构化来源(通常是网页)中提取结构化数据。 Scrapy Spider可以将提取的数据作为Python字典返回。 虽然方便且常见,但Python字典缺乏结构:很容易发生字段名拼写错误或返回不一致数据,尤其是在包含许多Spider的大型项目中。

为定义公共输出数据格式,Scrapy提供了Item类。

Item对象是用于收集抓取数据的简单容器。

它们提供了一个带有便捷语法的类似字典API,用于声明其可用字段。

各种Scrapy组件使用Items提供的额外信息:exporter查看已声明的字段以确定要导出的列,序列化可以使用Item字段元数据定制,trackref跟踪Item实例以帮助查找内存泄漏(请参阅使用trackref调试内存泄漏),等等。

声明Item¶

使用简单的类定义语法和Field对象声明Item。 这里是一个例子:

import scrapy

class Product(scrapy.Item):

name = scrapy.Field()

price = scrapy.Field()

stock = scrapy.Field()

last_updated = scrapy.Field(serializer=str)

注意

熟悉Django的人会注意到Scrapy Item的声明与Django Models相似,只是Scrapy项目更简单,因为没有不同字段类型的概念。

Item字段¶

Field对象用于为每个字段指定元数据。 例如,上例中所示的last_updated字段的串行器函数说明。

您可以为每个字段指定任何类型的元数据。 对Field对象接受的值没有限制。 出于同样的原因,没有所有可用元数据键的参考列表。 在Field对象中定义的每个键都可以由不同的组件使用,只有那些组件才知道它。 您也可以在您的项目中定义和使用任何其他Field键,以满足您的需要。 Field对象的主要目标是提供一种在一个地方定义所有字段元数据的方法。 通常,那些行为依赖于每个字段的组件使用特定的字段键来配置该行为。 您必须参考他们的文档以查看每个组件使用哪些元数据键。

请注意,用于声明Item的Field对象不会保留为类属性。 相反,它们可以通过Item.fields属性进行访问。

使用Item¶

这是使用上面声明的ProductItem展现对Item执行的常见任务的一些示例。 您会注意到API与dict API非常相似。

创建Item¶

>>> product = Product(name='Desktop PC', price=1000)

>>> print product

Product(name='Desktop PC', price=1000)

获取字段值¶

>>> product['name']

Desktop PC

>>> product.get('name')

Desktop PC

>>> product['price']

1000

>>> product['last_updated']

Traceback (most recent call last):

...

KeyError: 'last_updated'

>>> product.get('last_updated', 'not set')

not set

>>> product['lala'] # 获取未知字段

Traceback (most recent call last):

...

KeyError: 'lala'

>>> product.get('lala', 'unknown field')

'unknown field'

>>> 'name' in product # is name field populated?

True

>>> 'last_updated' in product # is last_updated populated?

False

>>> 'last_updated' in product.fields # is last_updated a declared field?

True

>>> 'lala' in product.fields # is lala a declared field?

False

设置字段值¶

>>> product['last_updated'] = 'today'

>>> product['last_updated']

today

>>> product['lala'] = 'test' # 设置未知字段

Traceback (most recent call last):

...

KeyError: 'Product does not support field: lala'

访问所有填充值¶

要访问所有填充值,只需使用典型的dict API:

>>> product.keys()

['price', 'name']

>>> product.items()

[('price', 1000), ('name', 'Desktop PC')]

其他常见任务¶

复制Item:

>>> product2 = Product(product)

>>> print product2

Product(name='Desktop PC', price=1000)

>>> product3 = product2.copy()

>>> print product3

Product(name='Desktop PC', price=1000)

从Item创建字典:

>>> dict(product) # 用所有填充值创建一个字典

{'price': 1000, 'name': 'Desktop PC'}

从字典创建Item:

>>> Product({'name': 'Laptop PC', 'price': 1500})

Product(price=1500, name='Laptop PC')

>>> Product({'name': 'Laptop PC', 'lala': 1500}) # 警告: 字典中未知字段

Traceback (most recent call last):

...

KeyError: 'Product does not support field: lala'

扩展Item¶

您可以通过声明原始Item的子类来扩展Item(以添加更多字段或更改某些字段的某些元数据)。

例如:

class DiscountedProduct(Product):

discount_percent = scrapy.Field(serializer=str)

discount_expiration_date = scrapy.Field()

您还可以对以前的字段元数据并附加更多值或更改现有值来扩展字段元数据,如下所示:

class SpecificProduct(Product):

name = scrapy.Field(Product.fields['name'], serializer=my_serializer)

它为name字段添加(或替换)serializer元数据键,保留所有先前存在的元数据值。

Item对象¶

Item加载器¶

Item加载器提供了一种便捷的机制来填充已被抓取的Items。 虽然Item可以使用自己的字典API来填充,Item加载器在抓取进程中提供了更便利的API填充它们,通过自动操作一些常见的任务类似在指定它之前解析原始数据。

换句话说,Items提供了抓取数据的容器,而Item Loaders提供了填充该容器的机制。

Item加载器旨在提供一种灵活,高效且简单的机制来扩展和覆盖不同的字段解析规则,无论是通过Spider还是通过源格式(HTML,XML等),不会成为维护的噩梦。

使用Item加载器填充Item¶

要使用Item加载器,你必须首先实例化它。 您可以使用类似字典的对象实例化它(例如 Item或dict)或者什么也不用,在这种情况下,Item会在Item Loader构造函数中使用ItemLoader.default_item_class属性中指定的Item类自动实例化。

然后,您开始将值收集到Item Loader中,通常使用选择器。 您可以将多个值添加到相同的Item字段; Item Loader将知道如何使用适当的处理函数“加入”这些值。

这是Spider中典型Item Loader用法,使用Item章节中声明的Product item:

from scrapy.loader import ItemLoader

from myproject.items import Product

def parse(self, response):

l = ItemLoader(item=Product(), response=response)

l.add_xpath('name', '//div[@class="product_name"]')

l.add_xpath('name', '//div[@class="product_title"]')

l.add_xpath('price', '//p[@id="price"]')

l.add_css('stock', 'p#stock]')

l.add_value('last_updated', 'today') # 你也可以使用文本值

return l.load_item()

通过快速查看代码,我们可以看到name字段是从页面中两个不同的XPath位置提取的:

//div[@class="product_name"]//div[@class="product_title"]

换句话说,通过使用add_xpath()方法从两个XPath位置提取来收集数据。 这是稍后将分配给name字段的数据。

之后,类似的调用用于price和stock字段(后者使用CSS选择器和add_css()方法),最后last_update字段使用不同的方法直接填充文本值(today):add_value()。

最后,当收集到所有数据时,将调用ItemLoader.load_item()方法,该方法实际上会返回填充了之前使用add_xpath(),add_css()和add_value()提取和收集的数据Item。

输入和输出处理器¶

Item加载器为每个(Item)字段提供了一个输入处理器和一个输出处理器。 输入处理器一收到(通过add_xpath(),add_css()或add_value()方法)提取的数据就立即处理,输入处理器的结果被收集并保存在ItemLoader中。 收集完所有数据后,调用ItemLoader.load_item()方法来填充并获取填充的Item对象。 这是在输出处理器被调用之前收集数据(并使用输入处理器处理)的情况。 输出处理器的结果是分配给Item的最终值。

我们来看一个例子来说明如何为指定字段调用输入和输出处理器(这同样适用于其他字段):

l = ItemLoader(Product(), some_selector)

l.add_xpath('name', xpath1) # (1)

l.add_xpath('name', xpath2) # (2)

l.add_css('name', css) # (3)

l.add_value('name', 'test') # (4)

return l.load_item() # (5)

将会发生:

- 来自

xpath1的数据被提取并通过name字段的输入处理器传递。 输入处理器的结果被收集并保存在Item Loader中(但尚未分配给Item)。 - 来自

xpath2的数据被提取,并通过(1)中使用的相同的输入处理器传递。 输入处理器的结果附加到(1)中收集的数据(如果有的话)。 - 除了使用CSS选择器从

css提取数据,这种情况与之前的类似,通过使用与(1)和(2)中相同输入处理器。 输入处理器的结果附加到(1)和(2)中收集的数据(如果有的话)。 - 这种情况也类似于以前的情况,不同之处在于要收集的值是直接分配的,而不是从XPath表达式或CSS选择器中提取。 但是,该值仍然通过输入处理器传递。 在这种情况下,由于该值不可迭代,在将其传递给输入处理器之前将其转换为单个元素的迭代器,因为输入处理器总是接收迭代器。

- 在步骤(1),(2),(3)和(4)中收集的数据通过

name字段的输出处理器传递。 输出处理器的结果被分配给Item中的name字段的值。

值得注意的是,处理器仅仅是可调用的对象,它们被调用以解析数据,并返回一个解析的值。 所以你可以使用任何方法作为输入或输出处理器。 唯一的要求是它们必须接受一个(且只有一个)位置参数,它将是一个迭代器。

注意

输入和输出处理器都必须接收迭代器作为它们的第一个参数。 这些函数的输出可以是任何东西。 输入处理器的结果将被附加到包含收集值(对于该字段)的内部列表(在加载器中)。 输出处理器的结果是最终将分配给该Item的值。

另外需要注意的是输入处理器返回的值在内部收集(以列表形式),然后传递给输出处理器以填充字段。

最后但同样重要的是,Scrapy附带了一些内置的常用处理器以方便使用。

声明Item加载器¶

声明Item加载器与声明Item类似,都是通过使用类定义语法。 这里是一个例子:

from scrapy.loader import ItemLoader

from scrapy.loader.processors import TakeFirst, MapCompose, Join

class ProductLoader(ItemLoader):

default_output_processor = TakeFirst()

name_in = MapCompose(unicode.title)

name_out = Join()

price_in = MapCompose(unicode.strip)

# ...

如您所见,输入处理器是使用_in后缀声明的,而输出处理器是使用_out后缀声明的。 您还可以使用ItemLoader.default_input_processor和ItemLoader.default_output_processor属性声明默认的输入/输出处理器。

声明输入和输出处理器¶

如前一节所述,可以在Item Loader定义中声明输入和输出处理器,以这种方式声明输入处理器是很常见的。 您还可以在 Item字段元数据中指定要使用的输入和输出处理器。 这里是一个例子:

import scrapy

from scrapy.loader.processors import Join, MapCompose, TakeFirst

from w3lib.html import remove_tags

def filter_price(value):

if value.isdigit():

return value

class Product(scrapy.Item):

name = scrapy.Field(

input_processor=MapCompose(remove_tags),

output_processor=Join(),

)

price = scrapy.Field(

input_processor=MapCompose(remove_tags, filter_price),

output_processor=TakeFirst(),

)

>>> from scrapy.loader import ItemLoader

>>> il = ItemLoader(item=Product())

>>> il.add_value('name', [u'Welcome to my', u'<strong>website</strong>'])

>>> il.add_value('price', [u'€', u'<span>1000</span>'])

>>> il.load_item()

{'name': u'Welcome to my website', 'price': u'1000'}

输入和输出处理器的优先顺序如下:

- Item加载器字段特定的属性:

field_in和field_out(最优先) - 字段元数据(

input_processor和output_processor键) - Item加载器默认值:

ItemLoader.default_input_processor()和ItemLoader.default_output_processor()(最低优先级)

另见:重用和扩展Item加载器。

Item加载器上下文¶

Item加载器上下文是Item加载器中所有输入和输出处理器共享的任意键/值的字典。 它可以在声明,实例化或使用Item加载器时传递。 它们用于修改输入/输出处理器的行为。

例如,假设您有一个函数parse_length,它接收一个文本值并从中提取文本长度:

def parse_length(text, loader_context):

unit = loader_context.get('unit', 'm')

# ... length parsing code goes here ...

return parsed_length

通过接受一个loader_context参数,该函数明确告诉Item加载器它能够接收Item加载器上下文,因此Item加载器在调用它时传递当前活动的上下文,以便处理器函数(本例中为 parse_length)可以使用它们。

有几种方法可以修改Item加载器上下文值:

通过修改当前活动的Item加载器上下文(

context属性):loader = ItemLoader(product) loader.context['unit'] = 'cm'

在Item Loader实例化时(Item加载器构造函数的关键字参数存储在Item Loader上下文中):

loader = ItemLoader(product, unit='cm')在Item Loader声明中,对于那些支持用Item Loader上下文实例化的输入/输出处理器。

MapCompose就是其中之一:class ProductLoader(ItemLoader): length_out = MapCompose(parse_length, unit='cm')

ItemLoader对象¶

-

class

scrapy.loader.ItemLoader([item, selector, response, ]**kwargs)¶ 返回一个新的Item Loader来填充给定的Item。 如果没有给出Item,则使用

default_item_class中的类自动实例化。当使用selector或response参数实例化时,

ItemLoader类提供了使用selectors从网页中提取数据的方便机制。参数: - item(

Item对象) - 填入Item实例随后调用add_xpath(),add_css()或add_value()。 - selector(

Selector对象) - 提取数据的选择器,使用add_xpath()(或add_css())或replace_xpath()(或replace_css())方法。 - response(

Response对象) - response使用default_selector_class构造选择器,当selector参数给定时,response参数被忽略。

Item,Selector,Response和其余关键字参数被分配给Loader上下文(可通过

context属性访问)。ItemLoader实例具有以下方法:-

get_value(value, *processors, **kwargs)¶ 通过给定的

processors和关键字参数处理给定的value。可用关键字参数:

参数: re (str 或 已编译的正则表达式) - 一个正则表达式,用于在处理器之前应用 extract_regex()方法从给定值中提取数据示例:

>>> from scrapy.loader.processors import TakeFirst >>> loader.get_value(u'name: foo', TakeFirst(), unicode.upper, re='name: (.+)') 'FOO`

-

add_value(field_name, value, *processors, **kwargs)¶ 处理,然后为给定字段添加给定的

value。value首先通过

get_value()传递给processors和kwargs,然后通过field input processor传递,结果附加到字段收集的数据中。 如果该字段已包含收集的数据,则添加新数据。给定的

field_name可以是None,在这种情况下,可以添加多个字段的值。 处理后的值应该是一个带有field_name映射值的字典。示例:

loader.add_value('name', u'Color TV') loader.add_value('colours', [u'white', u'blue']) loader.add_value('length', u'100') loader.add_value('name', u'name: foo', TakeFirst(), re='name: (.+)') loader.add_value(None, {'name': u'foo', 'sex': u'male'})

-

replace_value(field_name, value, *processors, **kwargs)¶ 与

add_value()类似,但用新值替换收集的数据,而不是添加它。

-

get_xpath(xpath, *processors, **kwargs)¶ 与

ItemLoader.get_value()类似,但接收XPath而不是value,该Xpath用于从与ItemLoader关联的选择器中提取unicode字符串列表。Parameters: - xpath(str) - 提取数据的XPath

- re (str 或 已编译的正则表达式) - 从选定的XPath区域提取数据的正则表达式

示例:

# HTML snippet: <p class="product-name">Color TV</p> loader.get_xpath('//p[@class="product-name"]') # HTML snippet: <p id="price">the price is $1200</p> loader.get_xpath('//p[@id="price"]', TakeFirst(), re='the price is (.*)')

-

add_xpath(field_name, xpath, *processors, **kwargs)¶ 与

ItemLoader.add_value()类似,但接收XPath而不是value,Xpath用于从与ItemLoader关联的选择器中提取unicode字符串列表。参考

get_xpath()为获取kwargs.Parameters: xpath (str) – 为提取数据的XPath 例子:

# HTML 片段: <p class="product-name">Color TV</p> loader.add_xpath('name', '//p[@class="product-name"]') # HTML 片段: <p id="price">the price is $1200</p> loader.add_xpath('price', '//p[@id="price"]', re='the price is (.*)')

-

replace_xpath(field_name, xpath, *processors, **kwargs)¶ 类似

add_xpath()但是会替换数据而非添加

-

get_css(css, *processors, **kwargs)¶ 类似

ItemLoader.get_value()接受一个可从列表中选择unicode字符串的css选择器替代特定的值ItemLoader.Parameters: - css (str) – 用于提取数据的CSS选择器

- re (str or compiled regex) – 用于从所选CSS区域提取数据的正则表达式

Examples:

# HTML snippet: <p class="product-name">Color TV</p> loader.get_css('p.product-name') # HTML snippet: <p id="price">the price is $1200</p> loader.get_css('p#price', TakeFirst(), re='the price is (.*)')

-

add_css(field_name, css, *processors, **kwargs)¶ 类似

ItemLoader.add_value()但是获取CSS选择器,代替一个用于提取从选择器连接的ItemLoaderunicode字符串列表的值See

get_css()forkwargs.Parameters: css (str) – the CSS selector to extract data from Examples:

# HTML snippet: <p class="product-name">Color TV</p> loader.add_css('name', 'p.product-name') # HTML snippet: <p id="price">the price is $1200</p> loader.add_css('price', 'p#price', re='the price is (.*)')

-

load_item()¶ 用目前收集的数据填充项目,然后返回。 收集到的数据首先通过output processors传递给每个项目字段以获取最终值。

-

nested_xpath(xpath)¶ 用xpath选择器创建一个嵌套的Loader。 提供的选择器与

ItemLoader关联的选择器应用是相对的。 嵌套的Loader与父ItemLoader共享Item,所以调用add_xpath(),add_value()replace_value()等将正常工作。

-

nested_css(css)¶ 用css选择器创建一个嵌套的Loader。 提供的选择器与

ItemLoader关联的选择器应用是相对的。 嵌套的Loader与父ItemLoader共享Item,所以调用add_xpath(),add_value()replace_value()等将正常工作。

-

get_collected_values(field_name)¶ 返回给定字段的收集值。

-

get_output_value(field_name)¶ 对于给定的字段,返回使用输出处理器分析的收集值。 此方法不能填充或修改Item。

-

get_input_processor(field_name)¶ 返回给定字段的输入处理器。

-

get_output_processor(field_name)¶ 返回给定字段的输出处理器。

ItemLoader实例具有以下属性:-

default_item_class¶ Item类(或工厂),用于在构造函数中未给出Item时,实例化Item。

-

default_input_processor¶ 默认输入处理器,用于没有指定输入处理器的字段。

-

default_output_processor¶ 默认输出处理器,用于那些没有指定输出处理器的字段。

-

default_selector_class¶ 如果在构造函数中只给出response,则该类用于构造

ItemLoader的selector。 如果在构造函数中给出了选择器,则忽略此属性。 该属性有时在子类中被覆盖。

-

selector¶ 要从中提取数据的

Selector对象。 它可以是构造函数中给出的,也可以是有response的构造函数使用default_selector_class创建的选择器。 该属性是只读的。

- item(

嵌套Loader¶

从文档的子部分解析相关值时,创建嵌套的Loader可能很有用。 想象一下,您正在从页面的页脚中提取详细信息,如下所示:

例:

<footer>

<a class="social" href="https://facebook.com/whatever">Like Us</a>

<a class="social" href="https://twitter.com/whatever">Follow Us</a>

<a class="email" href="mailto:whatever@example.com">Email Us</a>

</footer>

没有嵌套的Loader,你需要为你想要提取的每个值指定完整的xpath(或css)。

例:

loader = ItemLoader(item=Item())